ChatGPT:

🧠💻

“Your Code, My Prompt: Surviving the AI Engineer Apocalypse”

Why Software Jobs Are Changing, and How to Keep Yours

🤖 1. Large Language Models (LLMs) Can Code — Kinda

Let’s start with the sparkly headline:

“AI is writing code now! Software engineers are doomed!”

Before you panic-apply to become a wilderness guide, let’s get specific:

- LLMs like ChatGPT, Claude, and Code Llama can absolutely write code.

- Need a Python function to reverse a string? ✅

- Want to build a chatbot that translates pig Latin into SQL? ✅

- Want it to debug your flaky production issue? ❌

- These models were trained on enormous amounts of public code.

- GitHub, Stack Overflow, blog posts, etc.

- Which means they’re basically very fast, very confident interns who have read everything but understood nothing.

- They’re great at patterns, not meaning.

- LLMs predict the next token, not the best solution.

- They prioritize coherence over correctness.

- So they might write a method that looks perfect, but calls a nonexistent function named fix_everything_quickly().
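Here is roughly what that looks like in practice. This is a minimal sketch, not a real linter: `GENERATED` is a made-up snippet standing in for model output, and `undefined_calls` is a hypothetical helper that uses Python's standard `ast` module to flag plain function calls the snippet never defines or imports. It only handles simple cases (no scoping rules, no method calls).

```python
import ast
import builtins

# Made-up example of model output: tidy, documented, and wrong.
GENERATED = '''
def reverse_string(text):
    """Return text reversed."""
    cleaned = fix_everything_quickly(text)  # this helper does not exist anywhere
    return cleaned[::-1]
'''


def undefined_calls(source):
    """Return names called as plain functions that the source never defines or imports."""
    tree = ast.parse(source)
    known = set(dir(builtins))
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            known.add(node.name)
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                known.add((alias.asname or alias.name).split(".")[0])
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            known.add(node.id)
        elif isinstance(node, ast.arg):
            known.add(node.arg)
    called = {
        node.func.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
    }
    return called - known


print(undefined_calls(GENERATED))
```

The snippet parses without complaint, which is exactly the problem: nothing fails until someone actually calls `reverse_string`.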

🔥 2. Why This Still Freaks Everyone Out

Despite their tendency to hallucinate like a sleep-deprived developer on their fourth Red Bull, people are still panicking.

Here’s why:

- They’re fast.

One engineer with ChatGPT can often write, test, and deploy routine code at something like 2x or 3x their old speed.

- They reduce headcount.

If 10 developers used to maintain a legacy app, now 3 + one chatbot can handle it.

(The chatbot doesn’t eat snacks or file HR complaints.)

- They seem smart.

If you don’t look too closely, the code looks clean, well-commented, and confident.

Only after deploying it do you realize it’s also quietly setting your database on fire.

👩‍💻 3. So… Are Software Engineers Getting Replaced?

Short version: Some are.

Long version: Only the ones who act like robots.

- Companies are laying off engineers while saying things like:

“We’re streamlining our workforce and integrating AI tools.”

Translation: “We fired the junior devs and now use Copilot to build login forms.”

- Engineers who:

- Only write boilerplate

- Never touch architecture

- Don’t adapt or upskill

…are more likely to get replaced by a chatbot that costs $20/month.

But the software job itself? Still very much alive. It’s just mutating.

🚧 4. Hallucination Is Still a Huge Problem

Remember: these models will confidently lie to you.

- They invent functions.

- Misuse libraries.

- Cite fake documentation.

- Tell you “it’s fine” when it absolutely isn’t.

Imagine asking a junior dev for help, and they respond:

“Sure, I already fixed it. I didn’t, but I’m very confident I did.”

That’s your LLM coding partner. Looks great in a code review, until production explodes at 3 a.m.
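One cheap defense against invented library functions is to check that the thing exists before you build on it. The sketch below assumes a hypothetical helper, `api_exists`, which uses the standard `importlib` module to resolve a dotted name like `json.dumps`. It only proves the name exists, not that the model is using it correctly, and it can miss submodules that have not been imported yet.

```python
import importlib


def api_exists(dotted_path):
    """True if a name like 'json.dumps' resolves to a real attribute of an importable module."""
    module_name, _, attr_path = dotted_path.partition(".")
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    # Walk the remaining attribute chain, e.g. 'path.join' for 'os.path.join'.
    for part in attr_path.split(".") if attr_path else []:
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


for name in ["json.dumps", "os.path.join", "json.fix_everything_quickly"]:
    print(name, api_exists(name))
```

If the model cites a function and this comes back `False`, go read the real documentation before anything ships.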

🧠 5. “Don’t Just Learn AI — Learn to Use It Better Than the People Trying to Replace You”

This is the actual career advice people need right now — not just “learn AI,” but:

Become the engineer who knows how to use AI smartly, safely, and strategically.

Here’s how:

- Use AI to enhance, not replace, your skills.

Automate the boring stuff: tests, docstrings, regex, error messages, documentation.

Focus your real time on architecture, systems, and big-brain work.

- Catch AI’s mistakes.

Don’t just copy/paste. Always test, validate, and sanity check.

Be the one who catches the hallucinated method before it costs the company 4 hours and one lawsuit.

- Build tools around the tools.

Use APIs such as OpenAI’s or Anthropic’s (Claude) to create internal dev tools, bots, onboarding assistants, etc.

Be the dev who integrates AI into the team’s workflow instead of being just another prompt monkey.

- Teach others how to use it safely.

Become the “AI-savvy dev” everyone turns to.

Teach your team how to prompt, filter, and verify. That’s job security.
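To make "test, validate, and sanity check" concrete, here is a small sketch. Everything in it is illustrative: `chunk_generated` is a made-up example of plausible-looking model output with an off-by-one bug, `chunk_reviewed` is the corrected version, and `sanity_check` is a hypothetical helper that runs a few edge cases against either one.

```python
def chunk_generated(items, size):
    """Hypothetical AI-generated helper: reads fine, silently drops a trailing partial chunk."""
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_reviewed(items, size):
    """Same helper after a human caught the bug: the last partial chunk is kept."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def sanity_check(chunk_fn):
    """Run edge cases against chunk_fn and return a description of each failure."""
    cases = [
        (([1, 2, 3, 4], 2), [[1, 2], [3, 4]]),          # even split
        (([1, 2, 3, 4, 5], 2), [[1, 2], [3, 4], [5]]),  # leftover item
        (([], 3), []),                                  # empty input
    ]
    failures = []
    for (items, size), expected in cases:
        got = chunk_fn(items, size)
        if got != expected:
            failures.append(f"chunk({items}, {size}) returned {got}, expected {expected}")
    return failures


print(sanity_check(chunk_generated))
print(sanity_check(chunk_reviewed))
```

The generated version passes the happy path and the empty case, and only the "leftover item" case exposes it. That is why the edge cases you write yourself matter more than the ones the model volunteers.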

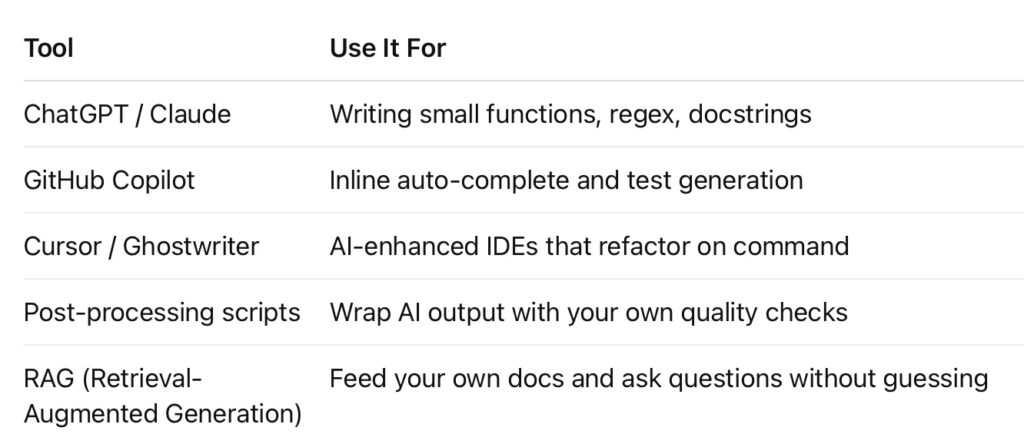

🔧 6. Tools & Tactics for Survival

🧙‍♂️ 7. The Future Engineer = Prompt Sorcerer + Code Wizard

Here’s the real shift happening:

Software engineers aren’t being replaced.

“Code typists” are.

The ones who thrive will:

- Use LLMs like power tools

- Know when the output is sketchy

- Integrate AI into dev pipelines

- Move from “just writing code” to designing the entire solution
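As one possible shape for "integrating AI into dev pipelines," the sketch below puts a gate between the model and the codebase. All names are assumptions for illustration: `generate_with_gate` is hypothetical, `complete` stands for whatever function calls your model (here replaced by a scripted fake so the example runs offline), and `check` is your own test. The `exec` call is for demonstration only; real generated code should run in a sandbox.

```python
import ast


def generate_with_gate(prompt, complete, check, max_attempts=3):
    """Ask a model for code and accept it only if it parses, loads, and passes check."""
    for _ in range(max_attempts):
        source = complete(prompt)
        try:
            ast.parse(source)        # gate 1: is it valid Python at all?
            namespace = {}
            exec(source, namespace)  # gate 2: does it load? (sandbox this in real use)
            if check(namespace):     # gate 3: does it pass our own test?
                return source
        except Exception:
            pass                     # any failure counts as a rejected attempt
    return None


def make_fake_model():
    """Stand-in for a real model call: returns scripted answers, worst first."""
    answers = iter([
        "def slugify(s): return s.lower(",                     # syntax error
        "def slugify(s): return s.lower()",                    # runs, wrong result
        "def slugify(s): return '-'.join(s.lower().split())",  # passes the check
    ])
    return lambda prompt: next(answers)


def check_slugify(namespace):
    """Our own acceptance test for the generated function."""
    return namespace["slugify"]("Hello Big World") == "hello-big-world"


accepted = generate_with_gate("write slugify", make_fake_model(), check_slugify)
print(accepted)
```

The model gets to be fast and confident, and the gate gets to be skeptical. If nothing passes within `max_attempts`, the function returns `None` and a human takes over.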

📜 TL;DR (Too Logical; Didn’t Run)

- LLMs can code, but they still lie sometimes.

- Companies are using them to justify layoffs — but they still need humans who think.

- If you only “learn AI,” you’re one of a million.

- If you learn to wield it better than the people trying to automate you — you’re valuable.

Welcome to the age of human+AI engineering.

Keep your brain sharp, your prompts clean, and your rollback plan ready.