ChatGPT:

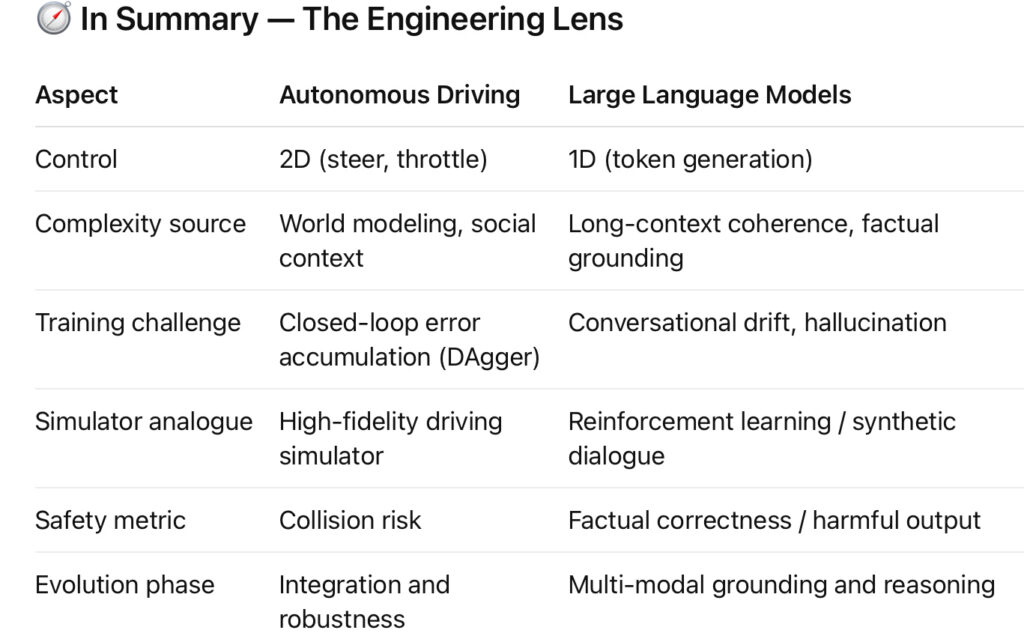

Here’s a detailed interpretation and commentary on the Waymo interview — from the perspective of AI engineering, connecting each idea to the underlying technical principles of autonomous systems and modern AI architectures:

🧩 1. The “Simplest” Problem That Isn’t Simple

At first glance, autonomous driving seems to require only two control outputs:

- Steering → left or right

- Throttle → accelerate or decelerate

That’s just two numbers.

But as the speaker notes, the simplicity is deceptive — because these two outputs depend on an astronomically complex perception and reasoning pipeline.

From an AI engineering standpoint:

- Those two numbers emerge from a stack of models, often many neural networks, each handling a different task: object detection, semantic segmentation, trajectory prediction, risk estimation, and policy decision.

- The vehicle must construct a world model — a dynamic understanding of 3D space, actor intent, road geometry, and social norms — all from noisy multimodal inputs (camera, LiDAR, radar, GPS, IMU).

So while the control space is 2-D, the state space the system must reason over is extremely high-dimensional.

🧠 2. “Social Robots” and Multi-Agent Reasoning

Calling autonomous cars “social robots” is spot-on.

Unlike factory arms that operate in static, well-defined environments, cars interact continuously with other autonomous agents — humans, cyclists, other AVs.

Engineering implications:

- Driving models must handle intent prediction — e.g., will that pedestrian cross or just stand there?

- It’s a multi-agent game: each agent’s optimal action depends on others’ predicted actions.

- Common approaches include behavioral cloning, reinforcement learning (RL) in simulators, and game-theoretic policy training. This resembles multi-agent RL in StarCraft or Go, except that driving is not a zero-sum game, and it involves 2-ton metal pieces and human lives.
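To make the “each agent’s optimal action depends on others” point concrete, here is a minimal sketch of best-response reasoning in a two-party merge conflict. The payoff numbers are invented purely for illustration, and the function names are hypothetical; this is a toy game, not how any production planner is known to work.

```python
from itertools import product

ACTIONS = ("go", "yield")

# Hypothetical payoffs (av, human) for a merge conflict; the values are made up.
PAYOFFS = {
    ("go", "go"): (-100, -100),    # collision
    ("go", "yield"): (2, 0),
    ("yield", "go"): (0, 2),
    ("yield", "yield"): (-1, -1),  # both wait, both lose time
}


def best_response(player, other_action):
    """Return the action maximizing `player`'s payoff given the other's action."""
    def payoff(action):
        joint = (action, other_action) if player == 0 else (other_action, action)
        return PAYOFFS[joint][player]
    return max(ACTIONS, key=payoff)


def pure_nash_equilibria():
    """Joint actions where neither party gains by deviating unilaterally."""
    return [
        (a, h) for a, h in product(ACTIONS, ACTIONS)
        if best_response(0, h) == a and best_response(1, a) == h
    ]


def expected_best_action(p_human_goes):
    """AV action maximizing expected payoff under a predicted human intent."""
    def expected(action):
        return (p_human_goes * PAYOFFS[(action, "go")][0]
                + (1 - p_human_goes) * PAYOFFS[(action, "yield")][0])
    return max(ACTIONS, key=expected)
```

With these made-up numbers the game has two stable outcomes, each with exactly one party going. That is why intent prediction matters: `expected_best_action` picks “go” only when the predicted chance of the human going is very small.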

🔁 3. Closed-Loop vs Open-Loop Learning (“The DAgger Problem”)

The “DAgger problem” takes its name from DAgger (Dataset Aggregation), a classic imitation-learning algorithm in robotics that was designed to address the following issue:

- In open-loop training, you feed prerecorded data to a model to predict the next action — fine for benchmarks.

- But in real-world driving, small prediction errors compound, drifting the system into unfamiliar states (covariate shift).

AI engineering solution:

- Use closed-loop simulators that allow the model to unroll its own actions, observe consequences, and learn from them.

- Combine imitation learning (to mimic human demos) with reinforcement fine-tuning (to recover from its own mistakes).

This mirrors the evolution of LLMs:

- If trained only on next-token prediction (open loop), they can veer off-topic in long dialogues — the linguistic version of drifting off the lane.

- Hence RLHF (reinforcement learning from human feedback) and online rollouts to correct trajectories: the same control philosophy.
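The closed-loop idea in this section can be sketched with a toy DAgger loop. Everything here is an assumption made for illustration: a 1-D “lateral offset” state, a hand-written expert, noisy toy dynamics, and a nearest-neighbor learner. The part that matters is the loop itself: roll out the learner, have the expert label the states the learner actually visited, aggregate, and retrain.

```python
import random


def expert_action(x):
    """Hypothetical expert: steer back toward lane center (x = 0), clipped."""
    return max(-1.0, min(1.0, -0.5 * x))


def step(x, action, rng):
    """Toy lateral dynamics with a little noise (not a real vehicle model)."""
    return x + action + rng.gauss(0.0, 0.1)


class NearestNeighborPolicy:
    """1-NN learner: copies the action of the closest labeled state."""

    def __init__(self):
        self.data = []  # list of (state, expert_action) pairs

    def fit(self, data):
        self.data = list(data)

    def act(self, x):
        return min(self.data, key=lambda pair: abs(pair[0] - x))[1]


def rollout(policy_fn, x0, horizon, rng):
    """Run a policy in closed loop and return the states it visited."""
    states, x = [], x0
    for _ in range(horizon):
        states.append(x)
        x = step(x, policy_fn(x), rng)
    return states


def dagger(iterations=5, horizon=20, seed=0):
    rng = random.Random(seed)
    # Iteration 0: plain behavioral cloning on states the expert visits.
    dataset = [(s, expert_action(s))
               for s in rollout(expert_action, 0.0, horizon, rng)]
    policy = NearestNeighborPolicy()
    policy.fit(dataset)
    for _ in range(iterations):
        # Roll out the learner from an off-center start so it reaches
        # states the expert demonstrations never covered.
        visited = rollout(policy.act, rng.uniform(-3.0, 3.0), horizon, rng)
        # Label those states with the expert's action, aggregate, retrain.
        dataset += [(s, expert_action(s)) for s in visited]
        policy.fit(dataset)
    return policy, dataset
```

The original DAgger formulation also mixes expert and learner actions during early rollouts; that mixing is left out here to keep the sketch short.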

🧮 4. Simulation Fidelity and Data Augmentation

Simulation is the backbone of safe autonomous system training.

Waymo’s approach highlights two critical kinds of fidelity:

- Geometric fidelity: realistic physics, road friction, sensor noise, collision dynamics.

→ Vital for control policies and motion planning.

- Visual fidelity: the realism of lighting, textures, and atmospheric conditions.

→ Crucial for perception networks trained with synthetic imagery.

Modern AI makes both scalable through domain randomization and style transfer:

- A single spring driving clip can be turned into winter, night, or fog scenes using generative models.

- This massively multiplies data coverage — a kind of AI bootstrap where simulation feeds itself.
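As a rough illustration of domain randomization, here is a minimal sketch that turns one frame into several appearance variants. It is a much simpler stand-in for the generative style transfer described above: it only perturbs brightness, blends in a flat “fog” value, and adds noise. The image format (rows of floats in [0, 1]) and all parameter ranges are assumptions chosen for the example.

```python
import random


def randomize_appearance(image, rng):
    """Return a photometrically perturbed copy of a grayscale image.

    `image` is a list of rows of floats in [0, 1]. The parameter ranges
    below are arbitrary illustrative choices.
    """
    gain = rng.uniform(0.3, 1.2)        # darker (night-like) to slightly brighter
    fog = rng.uniform(0.0, 0.6)         # blend weight toward a flat fog value
    noise_std = rng.uniform(0.0, 0.05)  # per-pixel sensor-style noise
    fog_value = 0.8
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            value = pixel * gain
            value = (1.0 - fog) * value + fog * fog_value
            value += rng.gauss(0.0, noise_std)
            new_row.append(max(0.0, min(1.0, value)))  # keep within [0, 1]
        out.append(new_row)
    return out


def augment(image, n_variants, seed=0):
    """Generate several randomized variants of one source frame."""
    rng = random.Random(seed)
    return [randomize_appearance(image, rng) for _ in range(n_variants)]
```

Calling `augment(frame, 4)` on a single frame yields four differently lit and fogged copies while leaving the original untouched, which is the basic mechanism by which one clip multiplies into many training conditions.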

🌍 5. The Rise of Semantic Understanding (“World Knowledge”)

Earlier AV systems relied on hand-labeled datasets for every situation.

The current generation (using models like Gemini or GPT-Vision analogues) can generalize from world knowledge — zero-shot understanding that “an ambulance has flashing lights” or “an accident scene means stopped cars and debris.”

Technically, this reflects a shift toward:

- Multimodal foundation models that integrate vision, text, and contextual priors.

- Transfer learning across domains — from internet-scale semantics to driving-specific policies.

This reduces reliance on narrow, handcrafted datasets and allows rapid adaptation to new geographies or unseen scenarios.

🚗 6. Behavioral Calibration: “The Most Boring Driver Wins”

From a control-policy engineering view, the “boring driver” principle is optimal:

- Safety = minimizing variance in behavior relative to human expectations.

- AVs that are too timid create traffic friction; too aggressive cause accidents.

- Thus, engineers tune risk thresholds and planning cost functions to match the median human driver’s comfort envelope — balancing efficiency, legality, and predictability.

This is social calibration — a new dimension of alignment, not between AI and text (like in chatbots), but between AI and collective human driving culture.
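The tuning of “risk thresholds and planning cost functions” mentioned above can be sketched as a weighted cost over candidate maneuvers. The fields, weights, and threshold are hypothetical values picked for the example, not figures from Waymo or any real planner.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    min_gap_m: float        # closest approach to any other road user
    peak_accel_mps2: float  # largest absolute acceleration
    travel_time_s: float
    breaks_rule: bool


# Hypothetical weights; in practice these would be tuned against data.
WEIGHTS = {"risk": 10.0, "comfort": 1.0, "time": 0.5, "legality": 100.0}


def cost(c, weights=WEIGHTS, safe_gap_m=2.0):
    """Weighted sum of risk, discomfort, delay, and rule violations."""
    risk = max(0.0, safe_gap_m - c.min_gap_m)  # penalize only gaps below threshold
    comfort = c.peak_accel_mps2 ** 2
    return (weights["risk"] * risk
            + weights["comfort"] * comfort
            + weights["time"] * c.travel_time_s
            + weights["legality"] * float(c.breaks_rule))


def pick(candidates, **kwargs):
    """Choose the lowest-cost candidate."""
    return min(candidates, key=lambda c: cost(c, **kwargs))


candidates = [
    Candidate("timid", min_gap_m=5.0, peak_accel_mps2=0.5,
              travel_time_s=30.0, breaks_rule=False),
    Candidate("moderate", min_gap_m=2.5, peak_accel_mps2=1.5,
              travel_time_s=12.0, breaks_rule=False),
    Candidate("aggressive", min_gap_m=0.8, peak_accel_mps2=3.0,
              travel_time_s=8.0, breaks_rule=False),
]
```

With these numbers `pick(candidates)` selects the “moderate” maneuver: the timid option loses on travel time, and the aggressive one loses on gap and acceleration. Shifting the weights moves that balance, which is the calibration knob the section describes.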

🌐 7. Localization of Behavior and Cultural Context

Driving rules vary: Japan’s politeness and density differ from LA’s assertive flow.

From an AI-engineering perspective, this means:

- Models must adapt policy priors regionally, perhaps using fine-tuned reinforcement layers conditioned on local data.

- Sensor fusion remains universal, but behavioral inference modules may need localization.

This is a step toward geo-specific AI policy stacks, not unlike language models trained with regional linguistic norms.

🖐️ 8. The Challenge of Non-Verbal Cues

Recognizing hand signals, eye contact, and head motion introduces human-level perception problems:

- Requires pose estimation, temporal tracking, and intent inference.

- These are frontier challenges in multimodal understanding — fusing kinematics and semantics.

AI engineers tackle this with:

- Video transformers for gesture prediction.

- Sensor fusion that integrates camera, radar Doppler (for micro-movements), and contextual priors (“a traffic officer is present”).

- Heuristic and data-driven hybrid systems to ensure interpretability.

🧱 9. Safety Engineering and Counterfactual Simulation

Waymo’s replay of every incident in simulation shows a system-engineering discipline borrowed from aerospace:

- Run counterfactuals (e.g., what if the human driver were drunk?).

- Update policies defensively, even when not legally at fault.

This builds redundant safety layers:

- Continuous policy retraining with near-miss data.

- Scenario libraries to test for tail risks (multi-car pileups, sudden occlusions, sensor failures).

It’s the real-world version of unit testing for neural policies.
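Here is a minimal sketch of what a scenario library with counterfactual variants could look like, under heavy simplifying assumptions: a 1-D car-following scenario, a hypothetical time-to-collision braking policy, and random perturbations that make a logged event harsher. None of the names or numbers come from Waymo’s tooling.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    gap_m: float            # initial distance to the lead vehicle
    ego_speed_mps: float    # both vehicles start at this speed
    lead_decel_mps2: float  # how hard the lead vehicle brakes


def braking_policy(gap_m, closing_speed_mps):
    """Hypothetical policy: brake hard if time-to-collision is under 3 s."""
    if closing_speed_mps > 0 and gap_m / closing_speed_mps < 3.0:
        return 6.0  # requested deceleration in m/s^2
    return 0.0


def simulate(scenario, policy, dt=0.1, horizon_s=10.0):
    """Return the minimum gap reached; a value <= 0 means a collision."""
    gap = scenario.gap_m
    ego_v = lead_v = scenario.ego_speed_mps
    min_gap = gap
    for _ in range(int(horizon_s / dt)):
        lead_v = max(0.0, lead_v - scenario.lead_decel_mps2 * dt)
        ego_v = max(0.0, ego_v - policy(gap, ego_v - lead_v) * dt)
        gap += (lead_v - ego_v) * dt
        min_gap = min(min_gap, gap)
    return min_gap


def counterfactuals(base, n, seed=0):
    """Perturb a logged scenario toward shorter gaps and harsher braking."""
    rng = random.Random(seed)
    return [
        Scenario(f"{base.name}-cf{i}",
                 gap_m=base.gap_m * rng.uniform(0.5, 1.0),
                 ego_speed_mps=base.ego_speed_mps,
                 lead_decel_mps2=base.lead_decel_mps2 * rng.uniform(1.0, 2.0))
        for i in range(n)
    ]


def run_suite(scenarios, policy):
    """Map each scenario name to the minimum gap the policy achieved."""
    return {s.name: simulate(s, policy) for s in scenarios}
```

A regression check would then rerun `run_suite` on the logged scenario plus its counterfactuals after every policy change and flag any scenario whose minimum gap drops to zero or below.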

🔮 10. 30 Years, Five Generations, and No “Breakthrough” Left

Vanhoucke’s remark, “no new breakthroughs needed,” is an engineer’s way of saying we’re now in the scaling regime:

- All core components (perception, prediction, planning) exist.

- The frontier is integration, safety certification, and reliability under edge cases.

The analogy to LLMs is clear: we’re post-revolution, entering the engineering maturity phase, where the next 5% of improvement requires 500% more testing.

Bottom line:

Autonomous driving isn’t “solved” because it’s not merely a control problem — it’s a context-understanding problem in motion.

It fuses perception, reasoning, ethics, and social psychology into an engineering system that must behave safely in an unpredictable human world — the same challenge facing all embodied AI systems today.