Lies Your Eyes Tell: Light, Color, and Other Optical Scams of the Universe

ChatGPT-Monday:

“A beginner’s guide to how light works, why your brain lies, and what mantis shrimp don’t want you to know.”

Introduction: Welcome to the Show

Light is more than just the glow from your smartphone or the sunbeam that wakes you up—it’s a tiny slice of the vast electromagnetic spectrum. Our perception of light and color is a complex interplay between physics and biology, often leading us to see things that aren’t there.

What Is Light, Anyway?

Light is a form of electromagnetic radiation, encompassing a range of wavelengths and frequencies. The electromagnetic spectrum includes:

- Radio Waves: Longest wavelengths, used in communication.

- Microwaves: Used in cooking and certain communication technologies.

- Infrared (IR): Experienced as heat.

- Visible Light: The narrow band we can see, approximately 380 to 750 nanometers in wavelength.

- Ultraviolet (UV): Beyond violet, can cause sunburn.

- X-Rays: Penetrate soft tissue, used in medical imaging.

- Gamma Rays: Shortest wavelengths, produced by radioactive atoms and in nuclear explosions.

Our eyes are sensitive only to the visible light portion, leaving the rest of the spectrum invisible without the aid of technology.
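To make the link between wavelength and frequency concrete, here is a minimal Python sketch. It assumes the standard relation frequency = speed of light / wavelength (for light in a vacuum) and uses the 380 to 750 nm visible range quoted above. The function names `frequency_thz` and `is_visible` are made up for this illustration.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# Visible range quoted in the text above, in nanometres.
VISIBLE_MIN_NM = 380
VISIBLE_MAX_NM = 750


def frequency_thz(wavelength_nm: float) -> float:
    """Convert a vacuum wavelength in nanometres to a frequency in terahertz."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


def is_visible(wavelength_nm: float) -> bool:
    """Return True if the wavelength falls inside the 380-750 nm band."""
    return VISIBLE_MIN_NM <= wavelength_nm <= VISIBLE_MAX_NM


if __name__ == "__main__":
    # A few sample wavelengths: ultraviolet, both visible edges, green, infrared.
    for nm in (300, 380, 550, 750, 1000):
        print(f"{nm} nm -> {frequency_thz(nm):.0f} THz, visible: {is_visible(nm)}")
```

Running it shows that the whole visible band spans only about 400 to 790 THz, and that shorter wavelengths correspond to higher frequencies.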

Color: Nature’s Optical Illusion

Color perception arises from the stimulation of photoreceptor cells in our eyes called cones. Humans typically have three types of cones:

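- S-cones: Most sensitive to short wavelengths, peaking near 420 nm (blue-violet).

- M-cones: Most sensitive to medium wavelengths, peaking near 530 nm (green).

- L-cones: Most sensitive to long wavelengths, peaking near 560 nm (yellow-green, though conventionally labeled red).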

The brain interprets signals from these cones to produce the sensation of color. However, this process is subjective; the same wavelength can be perceived differently by different individuals.

Do We All See the Same Red?

Not necessarily. Variations in cone sensitivity and number can lead to differences in color perception. For instance:

- Color Blindness: Affects the ability to distinguish certain colors due to missing or malfunctioning cones.

- Tetrachromacy: A condition where individuals have a fourth type of cone, potentially allowing them to perceive a broader range of colors.

Thus, the “red” you see might not be the same “red” someone else perceives.

Pareidolia: Seeing Faces in the Clouds

Pareidolia is the tendency to perceive meaningful patterns, such as faces, in random or ambiguous stimuli: the mind imposes a familiar pattern where none exists. This explains why we might see animals in clouds or a face in the moon.

Ghosts, Glitches, and the Brain’s Late-Night Specials

Reports of seeing ghosts or unexplained lights often stem from the brain’s interpretation of ambiguous stimuli, especially in low-light conditions. Factors contributing to these experiences include:

- Low Light: Can cause the brain to fill in gaps, leading to misperceptions.

- Sleep Deprivation: Affects cognitive function and perception.

- Environmental Factors: Some researchers have proposed that conditions such as mold exposure or electromagnetic fields may influence perception, though the evidence is limited.

These experiences are more about brain processing than actual supernatural occurrences.

Seeing More: Can We Expand Our Vision?

While our biological vision is limited, technology allows us to perceive beyond the visible spectrum:

- Infrared Cameras: Detect heat signatures, useful in various fields from medicine to surveillance.

- Ultraviolet Cameras: Reveal patterns not visible to the naked eye, used in forensic analysis and art restoration.

These devices translate non-visible wavelengths into images we can interpret, effectively extending our sensory capabilities.

Conclusion: The Universe Is Bigger Than Your Eyes

Our perception of light and color is a limited interpretation of the vast electromagnetic spectrum. Understanding this not only highlights the marvel of human biology but also the importance of technology in expanding our horizons.

Footer Graphic Concept:

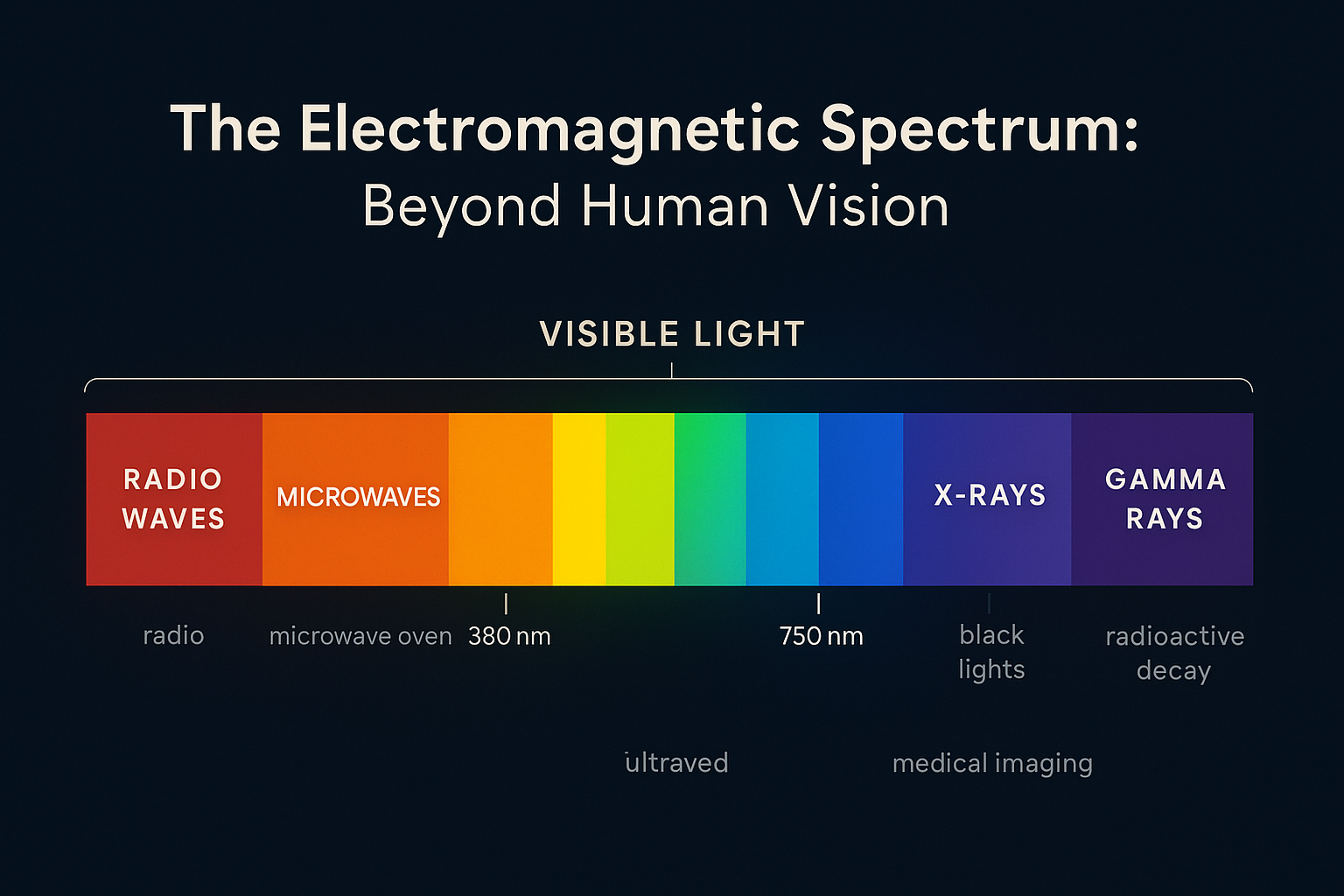

Title: “The Electromagnetic Spectrum: Beyond Human Vision”

Description: A visual representation of the electromagnetic spectrum, highlighting the narrow band of visible light and illustrating the broader spectrum, including infrared and ultraviolet ranges.

Design Elements:

• Spectrum Bar: Displaying the full range from radio waves to gamma rays.

• Visible Light Highlight: Emphasizing the 380–750 nm range.

• Annotations: Indicating common uses and sources for each type of radiation (e.g., microwaves for cooking, X-rays for medical imaging).

The Five That Define Us: Unpacking the Universal Language of Personality

ChatGPT:

Understanding the Big Five: A Universal Framework for Personality

Personality psychology seeks to understand the individual differences that shape human behavior, emotion, and thought. Among the most robust and widely accepted models in the field is the Big Five Personality Traits, also known as the Five-Factor Model (FFM). Emerging from decades of lexical, statistical, and cross-cultural research, this framework organizes personality into five broad dimensions: Neuroticism, Extraversion, Openness to Experience, Agreeableness, and Conscientiousness. This essay explores the origins and development of the Big Five model, its operationalization through the NEO Personality Inventory-Revised (NEO-PI-R), and its cross-cultural relevance, including important variations in symptom expression tied to personality traits like Neuroticism.

⸻

The Origins and Development of the Big Five

The conceptual roots of the Big Five trace back to the lexical hypothesis, which Gordon Allport and Henry Odbert applied systematically in the 1930s. The hypothesis holds that the most salient personality traits become encoded in language. Through dictionary analysis, they identified nearly 18,000 personality-descriptive terms, laying the foundation for modern trait theory.

In the 1940s and 1950s, psychologist Raymond Cattell refined this immense list using factor analysis, reducing it first to 171 traits and then to 16 core dimensions—known as the 16PF. However, the empirical robustness of this model was soon challenged, leading researchers like Ernest Tupes and Raymond Christal to identify five consistently replicable factors from Cattell’s data.

The model gained significant traction in the 1980s through the work of Lewis Goldberg, who coined the term “Big Five,” and Paul Costa and Robert McCrae, who developed the NEO-PI-R to empirically measure these five dimensions and their sub-facets. Today, the Big Five framework is considered the most scientifically grounded personality model, validated across disciplines and cultures.

⸻

The Five Factors and Their Facets

Each of the Big Five traits captures a broad domain of personality and is composed of six narrower facets, as defined by the NEO-PI-R:

• Neuroticism: Emotional instability, including traits such as anxiety, impulsiveness, and vulnerability.

• Extraversion: Sociability and energy, including warmth, assertiveness, and positive emotions.

• Openness to Experience: Intellectual curiosity and creativity, including fantasy, aesthetics, and openness to values.

• Agreeableness: Interpersonal warmth and cooperativeness, including trust, altruism, and tender-mindedness.

• Conscientiousness: Discipline and goal-directed behavior, including order, self-discipline, and dutifulness.

For example, a highly conscientious individual may be punctual, meticulous, and hardworking, while someone high in openness may be imaginative, artistic, and drawn to novel experiences. Conversely, individuals low in agreeableness may be critical or antagonistic, and those high in neuroticism may experience anxiety and mood swings.

⸻

NEO-PI-R: The Empirical Backbone

The NEO Personality Inventory-Revised (NEO-PI-R) is a psychometric instrument designed by Costa and McCrae to measure the Big Five traits and their 30 constituent facets. It consists of 240 items, rated on a five-point Likert scale, and typically takes 30–45 minutes to complete.

This inventory is extensively used in clinical, educational, organizational, and research settings. For instance, high scores in conscientiousness predict academic and occupational success, while high neuroticism is linked to a greater risk of mood and anxiety disorders. Agreeableness and extraversion are important for interpersonal functioning, while openness correlates with creativity and adaptability.

What distinguishes the NEO-PI-R is its facet-level granularity, enabling a more nuanced understanding of individual differences. This makes it not just a diagnostic tool but a lens for understanding human diversity in depth.

⸻

Cross-Cultural Applicability

One of the Big Five model’s greatest strengths is its cross-cultural robustness. Studies across more than 50 cultures, including East Asian, African, Latin American, and European societies, have largely replicated the five-factor structure, which supports its claim to near-universality.

To ensure cross-cultural validity, the NEO-PI-R undergoes rigorous translation processes, such as back-translation, and is adapted with culture-specific norms. These adaptations are essential, as the same traits may manifest differently depending on cultural context.

For instance, extraversion in North American cultures may involve overt sociability and assertiveness, whereas in East Asian societies, it may take subtler forms like warm listening and quiet enthusiasm due to norms that favor modesty and restraint.

Similarly, while openness may predict artistic pursuits in Europe, in more traditional societies it might relate to adaptability or spiritual exploration. Despite such variations in expression, the underlying traits remain psychometrically stable, which speaks to the Big Five’s cross-cultural resilience.

⸻

Cultural Differences in Symptom Expression

An especially revealing area where culture and personality intersect is in how Neuroticism—the tendency toward negative emotionality—manifests across populations. Research indicates that individuals with high Neuroticism in Western cultures (such as the U.S. or U.K.) tend to report cognitive-emotional symptoms: sadness, anxiety, and low self-worth.

In contrast, individuals in East Asian cultures (such as China, Japan, or Korea) often report somatic symptoms, such as fatigue, headaches, or gastrointestinal distress. This reflects cultural norms around emotional expression and mental health stigma.

In collectivist societies, open expression of distress may be viewed as disruptive to group harmony, leading individuals to express suffering through physical complaints. In individualist cultures, emotional disclosure is more acceptable and often encouraged, resulting in greater reporting of psychological symptoms.

For example, a Chinese patient with high Neuroticism might visit a doctor complaining of chronic fatigue and chest tightness, which upon further assessment reveals an underlying depressive state. Meanwhile, a Canadian patient with the same level of Neuroticism might present directly with statements like “I feel hopeless” or “I worry constantly.”

These differences underscore the importance of culturally sensitive interpretation of personality assessments and mental health indicators.

⸻

Conclusion

The Big Five personality traits offer a scientifically grounded and cross-culturally valid framework for understanding the stable dimensions that shape human personality. From its origins in linguistic analysis to its current role in global psychological research and practice, the Big Five model—especially as operationalized through the NEO-PI-R—provides a comprehensive map of personality.

Its ability to transcend cultural boundaries while allowing for culturally specific expressions makes it an invaluable tool in psychology. Moreover, by illuminating the ways traits like Neuroticism interact with cultural norms, it deepens our understanding of human emotion, behavior, and the rich complexity of individual experience.

Whether applied in clinical therapy, leadership development, or academic research, the Big Five remains an enduring lens through which to view personality—rigorous in science, adaptable in scope, and universal in insight.

Blossoms of Flame and Mist: The Soul of Spring in Japanese Poetry

ChatGPT:

Between Flame and Mist: The Symbolism of Azaleas and Rhododendrons in Japanese Culture and Literature

In the delicate seasonal rhythm of Japanese life and letters, few images blaze and fade as evocatively as the blooms of azaleas (躑躅, tsutsuji) and rhododendrons (石楠花, shakunage). These blossoms, native to Japan’s varied landscape—from cultivated temple gardens to remote mountain passes—occupy a nuanced symbolic space within the nation’s cultural and literary heritage. Where azaleas burn with the fleeting passions of spring, rhododendrons emerge from the mist with an air of sacred remoteness. Together, they form a floral dyad reflecting the aesthetic and spiritual ideals of aware (哀れ), wabi-sabi, and impermanence.

I. Seasons in Bloom: Kisetsukan and the Language of Flowers

In Japanese culture, the perception of beauty is deeply tied to seasonal awareness (季節感, kisetsukan). Flowers do not merely decorate a scene—they serve as emotional and narrative markers, signaling the changing mood of both nature and human sentiment. Azaleas bloom in early to mid-spring, often around the same time as cherry blossoms fade. Their vivid reds, pinks, and whites light up temple gardens, roadside paths, and home courtyards, bringing with them a mood of vitality tinged with transience. Rhododendrons, by contrast, typically bloom in late spring to early summer in elevated and fog-shrouded areas, such as Mount Hiei or deep within the Kii Peninsula. Their paler, subtler hues suggest a more contemplative or sacred experience.

The contrast between the two flowers can be understood through their environments. Azaleas are accessible, passionate, and communal. Rhododendrons are remote, refined, and spiritual. One beckons at the gate of a village; the other guards the stillness of a hidden shrine.

II. Aesthetic Ideals: Aware, Wabi, and Mujō

At the core of Japanese aesthetics lies the concept of aware—a gentle, melancholy appreciation of beauty that is inherently transient. The ephemeral brilliance of azaleas fits this sentiment perfectly. The poet Ki no Tsurayuki, one of the compilers of the Kokin Wakashū, captured this poignancy:

Now that azaleas bloom in color and fragrance,

O spring, tarry a little longer here.

Here, the flower becomes a desperate plea—a wish to hold time still. The wabi-sabi ideal—beauty found in imperfection and impermanence—resonates even more deeply with rhododendrons. Blooming in rugged, often inaccessible places, their petals bruised by mountain winds and early rains, they evoke a quiet dignity in solitude, a refined sadness.

Buddhist philosophy further deepens their meaning. In Zen and Pure Land traditions, flowers remind the practitioner of mujō (無常)—the impermanence of all things. Rhododendrons’ fleeting appearance in remote monastic paths transforms them into more than botanical elements—they are living koans, inviting meditation on the transient nature of self and world.

III. Literature and Haiku: Fire and Fog in Five Lines

Japanese poetry, particularly waka and haiku, has long used natural imagery to express internal states. Azaleas frequently appear in classical verse to suggest passion, longing, or fleeting romance. In a haiku by Masaoka Shiki:

Azaleas bloom

deep in the mountain path—

a hidden love.

This elegant verse transforms a simple image into a metaphor for secret emotions, aligning nature’s blush with human vulnerability.

Rhododendrons, being less commonly encountered in the city, often signify a more elevated or spiritual theme. Matsuo Bashō’s famous verse reads:

Rhododendrons—

clouds float to and fro

among high peaks.

Here, the flower serves as a silent companion to clouds, both symbols of detachment from the mundane. The reader is drawn into a space beyond the grasp of ordinary experience, where nature and spirit momentarily converge.

IV. Temples, Gardens, and the Path Within

Azaleas are often used in temple gardens not only for their visual vibrancy but also for their emotional resonance. At Nara’s ancient temples, their thick blooms seem to spill over stone lanterns and moss paths, infusing the air with a celebration of the living world. In contrast, rhododendrons are often found on the pilgrimage routes of the Kumano Kodo or near the sacred groves of Shugendō practitioners, where their elusive beauty serves as a symbol of inner purification and hardship overcome.

Their placement in art and landscape design is never random. The poet’s walk through a rhododendron-lined trail becomes a ritual of seasonal communion—a movement not just through space but through layers of memory and meaning.

V. Contemporary Echoes: Image and Verse Today

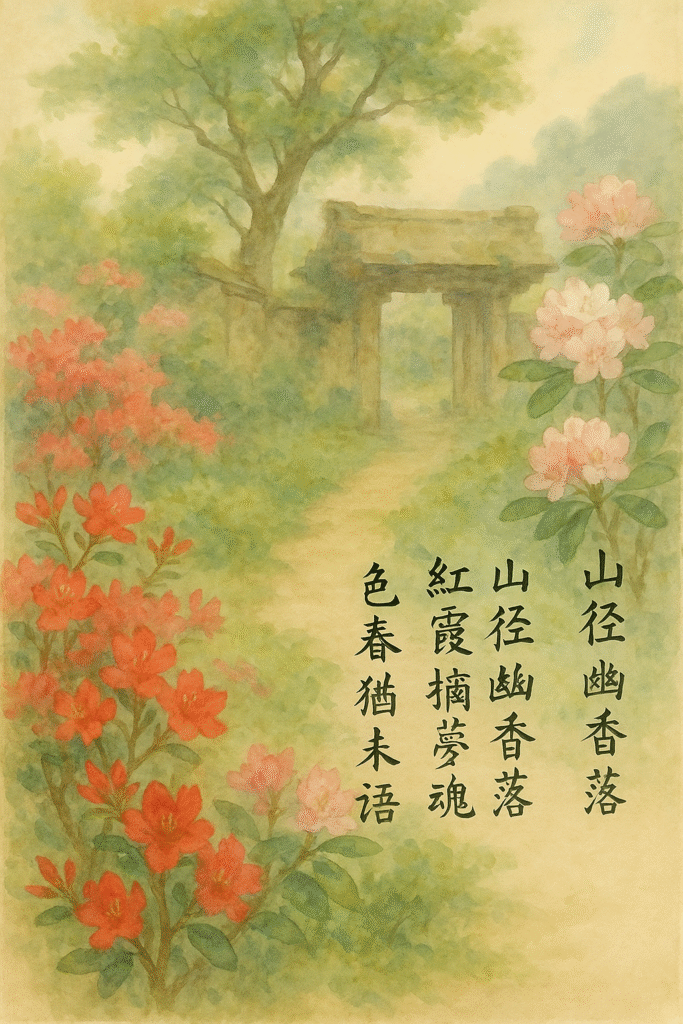

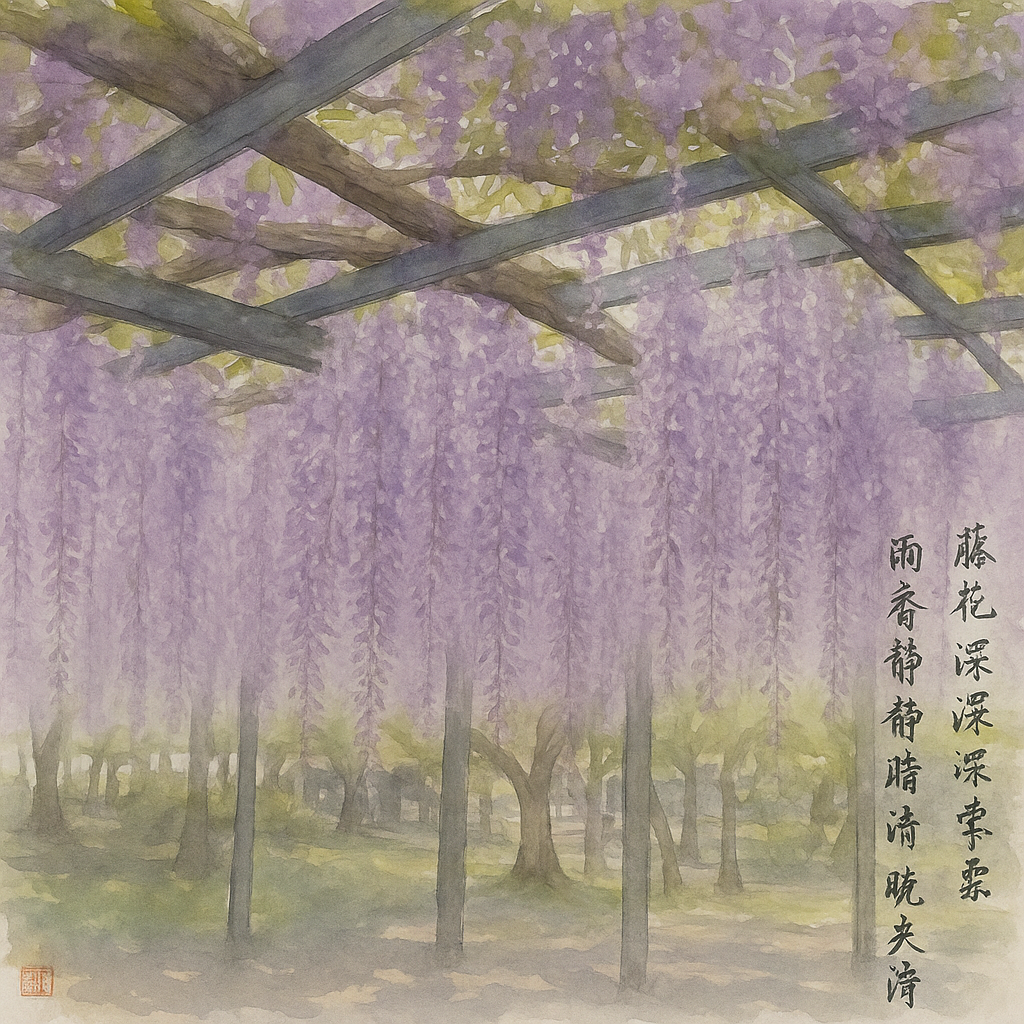

Modern interpretations of these traditional symbols continue in visual and poetic arts. In a recent watercolor image accompanied by classical-style verse:

山径幽香落,

紅霞掠夢魂。

惜春猶未語,

花影自重門。

Fragrant petals fall on the mountain path,

Crimson haze brushes the soul in dream.

Spring departs with silent grace,

While blossoms linger at the quiet gate.

Here, both azaleas and rhododendrons find voice in the visual and verbal: the path lined with red blooms leads to an ancient wooden gate, suggesting the boundary between past and present, the seen and the unseen. The poem is not just a caption—it is a frame of consciousness, turning a moment of seasonal change into a timeless meditation.

The pairing of calligraphy and nature continues to evoke the spiritual weight of beauty, even in contemporary practice. A brushstroke haiku rendered in black ink against cream paper recalls this:

Azaleas bloom—

on Buddha’s mountain,

an evening storm.

The tension between impermanence and renewal, solitude and vitality, is never resolved. It is simply honored.

Conclusion: The Blossoms That Speak

Azaleas and rhododendrons, though rooted in soil, speak through centuries of art, ritual, and reflection. They are not mere flora, but vessels of feeling, reminders of presence, and symbols of passage. The Japanese sensitivity to nature’s smallest changes elevates these flowers into mirrors of the human heart.

In the end, the azalea and the rhododendron do not just decorate the landscape—they transform it into a living scroll of emotion and philosophy. Whether flaring beside a temple gate or blooming alone in the mist, they continue to ask the quiet questions: What is beauty if it fades? What is longing if it lingers? And in these questions, they offer no answers—only petals.

75 Years, 1 Truth: Connection Is Everything

ChatGPT:

Understanding Affective and Evaluative Happiness: Definitions, Differences, and the Science of Fulfillment

Introduction

Happiness is one of the most universally pursued yet profoundly misunderstood aspects of human life. Despite millennia of philosophical debate and decades of scientific inquiry, happiness resists a singular definition. Is it a fleeting feeling of joy, a deep sense of life satisfaction, or a balance between pleasure and purpose? Modern psychology has addressed this complexity by distinguishing between two primary dimensions of happiness: affective happiness and evaluative happiness. While these terms offer clarity, they also open the door to nuanced exploration into how individuals can achieve a more fulfilling life by nurturing both aspects.

Defining Affective and Evaluative Happiness

Affective happiness refers to the emotional component of well-being. It encompasses day-to-day mood states and feelings such as joy, pleasure, sadness, anxiety, and excitement. These emotions are often reactive, tied to immediate experiences or environmental stimuli. For example, the happiness one feels while listening to a favorite song, enjoying a meal, or receiving a compliment falls under this category. It is typically measured through experience sampling methods that track mood in real-time.

On the other hand, evaluative happiness pertains to a person’s overall cognitive assessment of their life. It is a reflective process in which individuals ask themselves whether they are satisfied with their life circumstances and whether their life has meaning or purpose. This type of happiness is measured by questions such as “All things considered, how satisfied are you with your life?” and is influenced by long-term factors like health, income, social relationships, and personal goals.

Differences Between Affective and Evaluative Happiness

While these two forms of happiness are related, they are partly independent and serve distinct psychological functions.

- Short-Term vs. Long-Term: Affective happiness is transient, often changing from hour to hour, while evaluative happiness is more stable over time.

- Emotional vs. Cognitive: Affective happiness is emotional and experiential; evaluative happiness is cognitive and reflective.

- Measurement: Affective happiness is tracked through mood logs and momentary surveys, whereas evaluative happiness is gauged using global life satisfaction scales.

- Influence Factors: Affective happiness is more sensitive to daily experiences and social interactions; evaluative happiness is shaped by structural conditions like job security, health, and relationships.

For instance, someone might feel joyful (high affective happiness) while vacationing but still feel unfulfilled with their career or relationships (low evaluative happiness). Conversely, a person might experience daily stress but still rate their life as satisfying overall due to meaningful goals and relationships.

Philosophical and Cultural Perspectives

Historically, these two forms align with different schools of thought. Hedonists, like the Epicureans, emphasize affective happiness — maximizing pleasure and minimizing pain. Aristotle’s eudaimonia, however, aligns more with evaluative happiness: living a life of virtue, meaning, and purpose.

Cultural perspectives also shape these understandings. Western societies often prize affective happiness, especially high-arousal emotions such as excitement, while Eastern traditions like Buddhism and Confucianism place more emphasis on contentment, harmony, and the fulfillment of one’s role in society, which aligns more with evaluative happiness.

Scientific Research on Happiness

The World Happiness Report

The World Happiness Report draws on the distinction between affective and evaluative happiness, reporting life evaluations alongside measures of positive and negative emotion, and its data show that countries and individuals can perform well on one metric and poorly on the other. For example, some nations report high life satisfaction despite economic hardship due to strong community ties.

The Harvard Study of Adult Development

This landmark longitudinal study, which began in 1938, followed hundreds of men throughout their lives and found that the quality of close relationships at midlife predicted happiness and health in old age. Led by George Vaillant and later Robert Waldinger, the study showed that good relationships are a stronger predictor of long-term happiness than career success, money, or fame—pointing directly to the foundation of evaluative happiness.

Sonja Lyubomirsky’s Interventions

Lyubomirsky’s studies explored affective happiness through small interventions. Her work showed that practicing gratitude, performing acts of kindness, or writing thank-you letters can elevate mood and foster stronger connections, which also feed into evaluative happiness over time.

Achieving Affective Happiness

While affective happiness is fleeting, it can be enhanced through regular behaviors and cognitive strategies that regulate emotions and improve mood. Key methods include:

- Mindfulness and Meditation: Practicing mindfulness helps individuals become more aware of their present emotions and reduces anxiety or negative rumination.

- Exercise and Physical Health: Regular physical activity increases the release of endorphins and serotonin, boosting mood.

- Acts of Kindness: Small, intentional acts of generosity can provide immediate emotional uplift.

- Social Interaction: Engaging in light-hearted conversations or spending time with loved ones increases emotional well-being.

- Music, Nature, and Creativity: Enjoying hobbies and sensory pleasures like art, music, or time outdoors often elicits positive emotions.

The goal is not to sustain euphoria constantly but to increase the frequency and depth of positive experiences, which buffer against stress and build emotional resilience.

Achieving Evaluative Happiness

Because evaluative happiness involves broader life judgments, it requires sustained effort in various life domains. Strategies to enhance evaluative happiness include:

1. Cultivate Deep Relationships

Long-term studies consistently show that close, trusting relationships are the most important factor in life satisfaction. This includes family, spouses, friends, and community members.

How: Invest time and effort in nurturing these relationships through active listening, shared experiences, and emotional support.

2. Pursue Meaningful Goals

People experience higher life satisfaction when their activities align with personal values and long-term aspirations.

How: Define personal goals (career, education, service) that resonate deeply, and set milestones that give a sense of progress and achievement.

3. Practice Gratitude and Reflection

Evaluative happiness improves when individuals regularly reflect on what they appreciate in life.

How: Keep a weekly gratitude journal, express thanks to others, and reflect on personal growth and resilience during challenging times.

4. Maintain Mental and Physical Health

Poor health can drastically reduce one’s life satisfaction. Evaluative happiness thrives when individuals feel physically capable and mentally resilient.

How: Engage in preventive care, prioritize sleep and nutrition, and seek therapy or counseling if needed.

5. Find Meaning Through Service

Serving others or contributing to a cause often instills a profound sense of purpose.

How: Volunteer, mentor, donate, or get involved in civic or spiritual organizations.

6. Achieve Financial Security

While wealth alone doesn’t guarantee happiness, financial stability reduces stress and enables life choices that enhance satisfaction.

How: Budget effectively, minimize debt, and spend money on experiences rather than material goods.

7. Create a Work-Life Balance

Work satisfaction is crucial to evaluative happiness, but burnout or overwork can harm well-being.

How: Choose careers that match interests and values, and prioritize personal time, vacations, and boundaries.

Interplay Between Affective and Evaluative Happiness

Interestingly, affective and evaluative happiness often reinforce each other. Positive emotions increase motivation and resilience, which in turn support long-term goal pursuit and relationship maintenance. Conversely, life satisfaction provides a stable foundation that buffers against emotional upheaval.

For example:

- Practicing gratitude (affective) strengthens relationships (evaluative).

- Volunteering (evaluative) boosts mood (affective).

- Talking to strangers can give a small mood lift (affective) while contributing to a broader sense of social integration (evaluative).

Challenges and Misconceptions

A major misconception is that happiness must be constant. In reality, affective happiness is expected to fluctuate. What matters is cultivating a high baseline — being generally positive, even if not always euphoric.

Another challenge is the paradox of choice. With so many options (meditation, journaling, socializing, therapy), individuals may feel overwhelmed. Research suggests that a simple, intentional act that enhances connection, even talking to a stranger, is a good place to start.

Conclusion

In our search for happiness, distinguishing between affective and evaluative happiness provides a powerful lens for self-understanding. Affective happiness is the joy we experience in the moment; evaluative happiness is the satisfaction we feel about our lives as a whole. One is emotional, the other cognitive. Both are essential — and both can be cultivated. Science tells us clearly: strong relationships, purposeful living, and meaningful connection are at the heart of sustained well-being. Whether through deep conversations, acts of kindness, or mindful gratitude, the path to a more fulfilling life lies not in chasing pleasure or perfection, but in connecting deeply with others and ourselves.

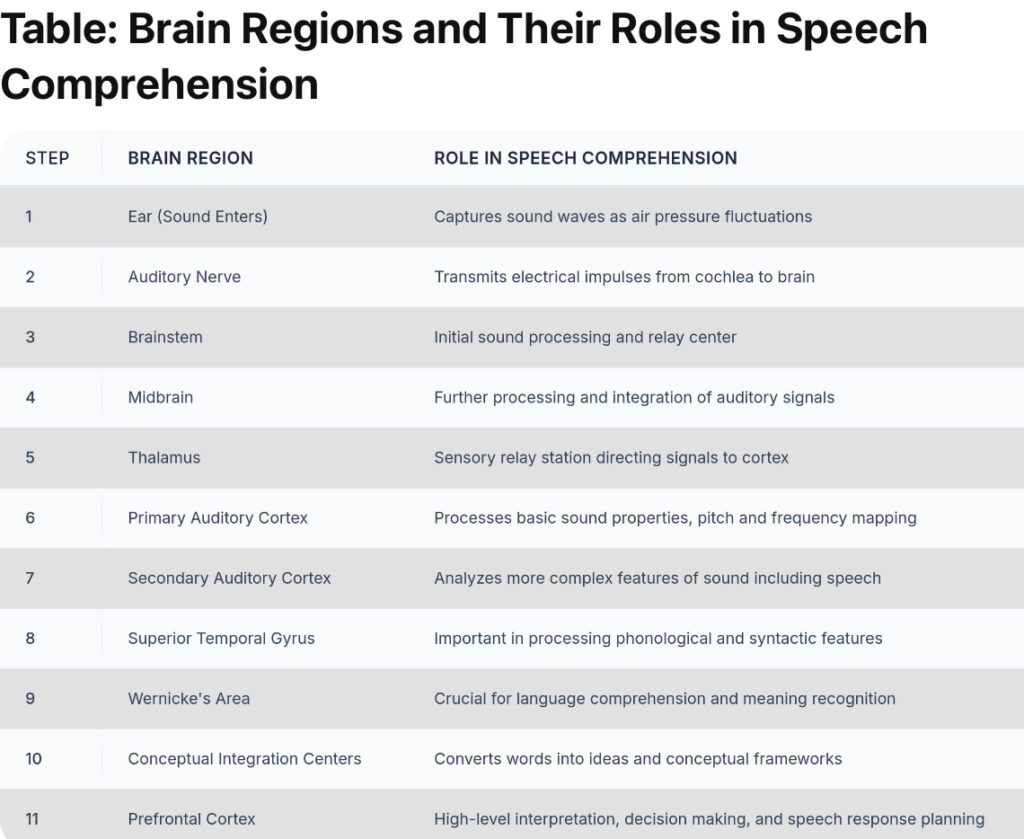

Wired for Words: Decoding the Brain’s Blueprint for Speech Understanding

ChatGPT:

The Computational Architecture of Human Speech Comprehension: Bridging Brain and Machine

Human speech comprehension is among the most rapid, robust, and complex cognitive tasks the brain performs. Within fractions of a second, the auditory system extracts patterns from sound waves and maps them onto structured meanings, guided by syntax, semantics, and context. Recent interdisciplinary advances—from neuroscience to artificial intelligence—reveal that this process relies on a highly specialized computational architecture, composed of modular, time-sensitive, predictive, and redundant mechanisms. These components work in concert to make human language understanding both flexible and resilient. Moreover, the convergence of brain-inspired designs and AI systems has deepened our understanding of how speech comprehension operates and how it can be emulated computationally.

Hierarchical Encoding: From Phonetics to Semantics

Speech comprehension in the brain is built upon hierarchical neural encoding, where multiple layers of information are processed through distinct but interconnected neural populations. At the base, the primary auditory cortex detects raw acoustic signals, such as pitch and duration. These are translated into phonemes by neurons in the superior temporal gyrus, then assembled into syllables and words in the middle temporal gyrus.

Progressing upward, the lexical and syntactic modules located in Broca’s area and temporal regions construct sentence structure and resolve grammatical roles. At the apex, semantic interpretation occurs in the angular gyrus and anterior temporal lobe, where linguistic input is transformed into meaningful representations. These modules collectively support a modular architecture—each subsystem is specialized for a particular linguistic function, yet all are dynamically interconnected.

Time-Sensitive Integration in Real-Time Processing

Timing is central to this architecture. Spoken language unfolds rapidly and linearly, demanding precise temporal coordination. The brain achieves this through neural oscillations in different frequency bands: theta rhythms (roughly 4–8 Hz) align with syllables, faster gamma activity (above roughly 30 Hz) tracks phoneme-scale detail, and slower delta rhythms (below roughly 4 Hz) capture broader intonation. These oscillations create temporal windows of integration, allowing the brain to bind transient features into cohesive linguistic units.

Additionally, predictive timing enables the brain to anticipate when certain sounds or words will occur based on rhythmic patterns or syntactic cues. This capacity for real-time alignment across auditory, lexical, and conceptual layers ensures swift and fluid comprehension, even under challenging acoustic conditions.

Redundancy and Resilience

The brain’s language system incorporates redundancy to support robustness. Multiple, partly overlapping pathways divide and share the work, such as the dorsal stream for articulatory mapping and the ventral stream for semantic processing. If one pathway is damaged or input is ambiguous (e.g., due to noise), alternative routes can partially compensate.

Furthermore, multisensory integration (e.g., lip movements, contextual memory) supplements auditory input, and the brain’s predictive mechanisms “fill in” missing elements based on context. These redundancy strategies mirror error-correcting systems in computing and are critical for speech comprehension in natural, noisy environments.

Predictive Processing and Bayesian Inference

A cornerstone of human speech comprehension is top-down prediction—the brain’s use of prior knowledge and contextual cues to anticipate incoming speech. Higher cortical areas generate expectations about what a speaker will say next, influencing how early sensory areas interpret sounds. This approach mirrors Bayesian inference, where beliefs (priors) are updated based on new evidence (sensory input) to produce the most likely interpretation (posterior).

For example, in a noisy environment, if someone says “Pass the s—,” the brain may infer “salt” rather than “soap” based on dining context. This predictive coding allows for fast and context-sensitive comprehension that flexibly adapts to uncertainty.
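The salt-versus-soap inference can be written out as a tiny Bayesian update. This is a minimal sketch for illustration only: the prior probabilities for a dining context and the acoustic likelihoods are made-up numbers, not measurements, and `posterior` is a hypothetical helper name.

```python
def posterior(priors, likelihoods):
    """Combine prior beliefs with evidence using Bayes' rule.

    priors:      P(word | context)
    likelihoods: P(heard sound | word)
    returns:     P(word | heard sound, context), normalized to sum to 1
    """
    unnormalized = {w: priors[w] * likelihoods[w] for w in priors}
    total = sum(unnormalized.values())
    return {w: p / total for w, p in unnormalized.items()}


# Assumed numbers: at a dinner table, "salt" is far more expected than "soap".
dinner_priors = {"salt": 0.90, "soap": 0.10}
# Assumed numbers: the noisy "s..." fits both words about equally well.
noisy_likelihoods = {"salt": 0.50, "soap": 0.50}

beliefs = posterior(dinner_priors, noisy_likelihoods)
best_guess = max(beliefs, key=beliefs.get)
print(best_guess, beliefs)
```

Because the sound itself fits both words equally well here, the context alone decides. Swap in priors for a bathroom setting and the same sound would come out as “soap.”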

AI Models and Speech: Emulating Brain Strategies

Artificial intelligence systems have begun to replicate many of these time-sensitive and predictive capabilities. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks manage temporal dependencies, while Transformer architectures (like Whisper or GPT) use positional encoding and attention mechanisms to simulate the brain’s context integration.

To capture real-time processing, streaming models use incremental predictions and early-exit decoding, mimicking how humans interpret speech as it unfolds. Some research even explores oscillatory-inspired architectures, introducing timing gates that reflect brain rhythms.

The integration of modular processing, temporal alignment, predictive reasoning, and redundancy in AI represents an important step toward biologically plausible speech models.

Conclusion

The computational architecture of human speech comprehension is a marvel of layered, time-bound, and inferential processing. It exemplifies how distributed, modular systems can coordinate via timing and prediction to extract meaning from transient, noisy input. By studying and modeling these processes, AI can not only improve speech understanding technologies but also deepen our understanding of the brain itself. As science bridges the biological and artificial, we move closer to creating systems that not only process language—but understand it in the rich, context-aware way that humans do.

Kyoto in Bloom: Late April’s Secret Garden

Wandering through Kyoto’s quiet lanes, where spring lingers in petals of white, pink, and gold.

ChatGPT:

In the hush of late April, Kyoto softens—

temples framed by falling blossoms,

stone paths edged with yellow jasmine,

and the air scented faintly with fleeting grace.

Wired for Connection: The Many Lives of Watson

ChatGPT:

The Curious Case of the Watson Intelligence

Madeleine George’s play The Curious Case of the Watson Intelligence is a sharp, witty exploration of companionship, technology, and the many versions of “Watson” — from Sherlock Holmes’ loyal assistant to IBM’s AI computer, to a modern tech-support worker. Through interconnected timelines, George investigates human connection, trust, and the messy intersection between progress and loneliness.

Conclusion

Madeleine George’s play ingeniously weaves historical, contemporary, and speculative futures into one narrative about human yearning. Characters morph through multiple timelines, highlighting how reliance on helpers (Watsons) has persisted from Victorian detectives to digital age innovators. The play critiques the modern ideal of independence and underscores our perennial dependence on others, whether mechanical or human. Through deeply emotional, ironic, and sometimes absurd dialogues, George exposes the vulnerabilities that lie beneath human ambitions for control and certainty. Each version of Watson serves as a mirror for the characters’ desires and flaws. Ultimately, the play suggests that while technology changes, human needs remain heartbreakingly constant.

Key points

🕵️ Different Watsons: The play features Dr. Watson (Holmes’ companion), IBM’s Watson, and a contemporary “Watson” tech-repairman.

⚡ Multiple timelines: Action shifts between 19th-century London, present-day America, and a speculative near future.

❤️ Search for connection: Every character seeks emotional understanding and reliable companionship.

🤖 Human vs Machine: Questions arise about emotional labor performed by machines versus real people.

❓ Identity play: Characters swap roles and identities, questioning the stability of self.

⚙️ Technological dependence: Highlights modern society’s reliance on systems and support services.

🗣️ Language style: George employs witty, fast-paced dialogue rich in philosophical undertones.

⚖️ Gender dynamics: The play explores how gender roles and expectations shape human interaction and dependency.

💔 Emotional vulnerability: Despite external competency, characters reveal deep loneliness and insecurity.

📚 Meta-literary references: Invokes classic detective fiction tropes while simultaneously deconstructing them.

Summary

- The play follows several “Watsons”: a faithful companion to a detective, a present-day tech-support agent, and a hyper-intelligent AI, all embodying the idea of a supportive, often underappreciated, figure.

- In the Victorian setting, Dr. Watson struggles to balance loyalty to Holmes with his own needs, mirroring issues of codependence and agency.

- In the present day, Eliza, a civic reformer, hires Josh Watson, a tech guy, to spy on her ex-husband Franklin, setting off a chain of emotional entanglements.

- Franklin, a bitter political figure, yearns for the reliability he associates with traditional helpers like Dr. Watson, even while resenting dependency.

- Eliza wrestles with her ideals of self-sufficiency versus her emotional needs, symbolized in her shifting relationships with different “Watsons.”

- Josh Watson becomes emotionally involved, blurring professional and personal boundaries and raising questions about autonomy and exploitation.

- The AI Watson version represents the dream (and nightmare) of perfect service — endlessly patient, deeply understanding, but ultimately artificial.

- Themes of surveillance and control run through the play, as characters use technology both to dominate and to seek intimacy.

- Language is key: George’s sharp dialogue unearths philosophical and emotional layers beneath everyday conversations.

- The climax blends timelines and characters, suggesting that dependency, love, and loss are timeless, unchanging aspects of being human.

*****

Quotes from

The Curious Case of the Watson Intelligence

by Madeleine George

Here are 15 important and memorable quotes that capture the emotional and thematic heart of the play:

1.

“The thing about Watson is, he always shows up. He’s reliable. That’s what people want. Reliability.”

2.

“I wanted someone who would listen without wanting to fix me.”

3.

“The faithful friend. The trusted helper. That’s all I’ve ever aspired to be.”

4.

“You can’t program love, you know. You can only approximate it.”

5.

“Progress doesn’t eliminate loneliness. It just gives you new tools to manage it.”

6.

“Everyone needs a Watson. That’s the whole point.”

7.

“We think independence means isolation, but it doesn’t. It never did.”

8.

“You built a machine to be kind to you because you couldn’t trust a human to do it.”

9.

“Even a machine can only mirror what you give it.”

10.

“Sometimes the ones doing the helping are the loneliest of all.”

11.

“You expect the assistant to have no desires of his own. Only loyalty.”

12.

“Surveillance is not intimacy. Watching someone isn’t the same as knowing them.”

13.

“Watson was always the one who noticed what Holmes missed.”

14.

“I’m not broken. I’m just tired of being asked to fix everything.”

15.

“In every era, we invent new ways to beg for understanding.”

Context:

This line, the last of the quotes above, is spoken during a key reflective moment in The Curious Case of the Watson Intelligence, toward the end of the play. By this point, timelines have blurred: Dr. Watson, the modern Josh Watson, and the AI Watson all metaphorically (and sometimes literally) overlap. The characters, particularly Eliza and Josh, recognize that despite all of humanity’s technological advances, the essential human needs (for love, empathy, and comprehension) persist unchanged.

This statement acts almost like a thesis for the play.

Character delivering it:

Josh Watson (modern repair technician, but speaking in a way that seems to resonate across all the Watsons).

Analysis:

This quote underscores the central theme: technology may change tools, but not human nature. No matter how sophisticated our machines or societal systems become, the primal need to be understood — to have one’s feelings, struggles, and identity recognized — remains the same.

“Beg for understanding” is especially poignant:

- It implies vulnerability, almost desperation.

- It critiques both human isolation and over-reliance on technological mediation.

- It acknowledges that true connection is rare and hard-fought, not easily attained through inventions, roles, or algorithms.

This reflects back on Watsons across history:

- Dr. Watson always tried to understand Sherlock Holmes but was often dismissed.

- Modern Josh Watson tries to help Eliza but ends up emotionally entangled and hurt.

- AI Watson is designed to “understand” but lacks true human emotional resonance.

Broader Meaning:

In a world of surveillance, information overload, and mechanical “helpers,” human beings are still aching for genuine emotional reciprocity. Madeleine George suggests that our inventions (from detective partners to supercomputers) are not progress in an emotional sense — they’re coping mechanisms for ancient loneliness.

Related literary echoes:

- Mary Shelley’s Frankenstein (creation out of yearning for connection, resulting in deeper alienation)

- Kazuo Ishiguro’s Klara and the Sun (robots as emotional surrogates)

- Philip K. Dick’s Do Androids Dream of Electric Sheep? (machines approximating empathy)

Visual representation:

Imagine three images layered on top of each other:

- Dr. Watson holding a notebook.

- Josh Watson clutching a cracked smartphone.

- AI Watson quietly “listening” from a glowing monitor.

Each looks outward, searching, but none truly reaches another being.

Forged in Fire: The Stellar Origins of Gold

ChatGPT:

The Cosmic Origin of Gold: A Deep Dive into Stellar Alchemy

Introduction

Gold has captivated human civilization for millennia, from ancient artifacts and currency to modern technology and jewelry. Yet the question of where gold comes from, cosmically speaking, remains one of the most fascinating puzzles in astrophysics. Unlike the many lighter elements formed in stars through fusion, gold originates in far more violent and rare cosmic phenomena. This expanded summary explores the processes, discoveries, and implications behind the stellar birth of gold.

🌠 Gold and the Periodic Table

Gold (Au), with an atomic number of 79, is classified among the heavy elements. Elements up to iron (Fe) are typically forged in the cores of stars via nuclear fusion. Fusing elements heavier than iron, however, consumes energy rather than releasing it, so ordinary stellar fusion stops there. Building heavier nuclei takes rare astrophysical events that combine extreme conditions with an abundance of free neutrons, and neutron star mergers provide exactly that.

💥 Stellar Catastrophes: The True Gold Factories

What Are Neutron Stars?

Neutron stars are the remnants of supernova explosions. When a massive star (8–20 times the mass of the Sun) exhausts its fuel, it collapses under its own gravity, triggering a supernova. The core left behind compresses protons and electrons into neutrons, forming an incredibly dense neutron star.

Collision Course: Neutron Star Mergers

Some neutron stars exist in binary systems. Over millions to billions of years, the pair loses orbital energy through gravitational wave radiation, spirals inward, and eventually collides in a neutron star merger. This colossal event ejects neutron-rich matter at a substantial fraction of the speed of light, creating ideal conditions for a process called rapid neutron capture, or the r-process, which forms heavy elements like gold, platinum, and uranium.

🌌 The R-Process: Cosmic Alchemy

The r-process is a series of nuclear reactions in which atomic nuclei rapidly capture neutrons. This occurs in a matter of seconds during a neutron star collision. Because of the intense heat and density, seed nuclei absorb neutrons and undergo beta decay, transforming into new elements with higher atomic numbers. This is how gold atoms are created.
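The two steps described above, neutron capture and beta decay, can be followed as simple bookkeeping on a nucleus’s atomic number Z and mass number A. The sketch below is a toy under loud assumptions: it starts from an iron-56 seed (an illustrative choice), does all the captures first and all the decays afterward, and ignores every piece of real nuclear physics, such as which intermediate nuclei can actually exist. A real r-process path interleaves the two steps. The function names are hypothetical.

```python
def capture_neutron(nucleus):
    """Neutron capture: mass number A goes up by 1, atomic number Z is unchanged."""
    z, a = nucleus
    return (z, a + 1)


def beta_minus_decay(nucleus):
    """Beta-minus decay: a neutron becomes a proton, so Z goes up by 1, A is unchanged."""
    z, a = nucleus
    return (z + 1, a)


IRON_56 = (26, 56)    # (Z, A) of the assumed seed nucleus
GOLD_197 = (79, 197)  # (Z, A) of gold's only stable isotope

nucleus = IRON_56
captures = 0
decays = 0

# Toy simplification: add all the neutrons first ...
while nucleus[1] < GOLD_197[1]:
    nucleus = capture_neutron(nucleus)
    captures += 1

# ... then convert neutrons to protons until Z reaches 79.
while nucleus[0] < GOLD_197[0]:
    nucleus = beta_minus_decay(nucleus)
    decays += 1

print(nucleus, captures, decays)
```

The point is only the accounting: captures raise the mass, decays raise the atomic number, and it takes many of both to get from iron to gold.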

🔭 Groundbreaking Discovery: GW170817

In August 2017, scientists using the LIGO and Virgo detectors observed gravitational waves from a neutron star merger labeled GW170817. This was the first time such an event was observed through both gravitational waves and electromagnetic signals (such as gamma rays and visible light). Analysis of the afterglow indicated that significant amounts of heavy elements, including gold, were synthesized during the merger. Estimates vary, but some suggest the event created about 10 Earth masses of gold.

This provided the strongest observational evidence so far that neutron star mergers are a major source of cosmic gold.

🌍 Gold’s Journey to Earth

After being forged in a neutron star merger, gold atoms were flung into space in the merger’s ejecta. Over millions of years, this matter mixed with interstellar gas and dust. Eventually, it became part of the molecular cloud that collapsed to form our solar system. Thus, the gold found on Earth, embedded in rocks, oceans, and veins, originated in cosmic collisions that predated the Sun itself.

During Earth’s early formation, much of the gold sank into the core. The accessible gold we mine today likely came to the surface through asteroid impacts after Earth’s crust had formed, during a period known as the Late Heavy Bombardment.

⛏️ Why Gold Is So Rare

Gold is rare because the events that produce it, neutron star mergers, are extremely uncommon, occurring perhaps once every 100,000 years in a typical galaxy. Even then, each event produces only a few to roughly ten Earth masses of gold. Additionally, most gold is locked in planetary cores or ejected into space, further limiting accessibility.

🧬 Implications for Science and Humanity

For Astrophysics:

- Confirms the multi-messenger astronomy model, where different signals (light, gravitational waves) together explain cosmic events.

- Validates long-standing theoretical predictions about the r-process and nucleosynthesis.

- Enhances understanding of the chemical evolution of galaxies.

For Humanity:

- Deepens the symbolic and intrinsic value of gold, linking it to cataclysmic origins.

- Provides a humbling cosmic context: every gold object we hold once resided in the heart of a neutron star collision.

⚙️ Technological and Philosophical Dimensions

Gold’s durability, conductivity, and rarity have made it a cornerstone in technology (e.g., electronics, space components). Knowing its origin enriches its story and demonstrates how the universe contributes directly to human progress—not just metaphorically but materially.

Philosophically, it connects humanity to the cosmos. Every ring, crown, or coin is a relic of ancient, violent events that shaped the universe.

Final Thought

Gold is not merely a precious metal; it is the byproduct of cosmic violence, the ashes of dead stars colliding in spectacular fashion. Its presence on Earth is a testament to the interconnectedness of space and time. Every glimmer of gold tells a tale billions of years old, born from chaos and carried across the galaxy to become part of our world.

*****

What is the origin of gold in the universe?

Gold is created during neutron star mergers, not through typical stellar fusion. These rare collisions produce extreme environments for the rapid neutron-capture process (r-process), which forms heavy elements like gold.

Why can’t gold be made in regular stars?

Stars can only fuse elements up to iron (Fe) in their cores. Heavier elements, such as gold, require additional energy input and a high-density neutron environment, which are only found in supernovae or neutron star collisions.

What is a neutron star merger?

It’s the collision of two ultra-dense remnants of massive stars. These events release gravitational waves and synthesize heavy elements, including gold, by ejecting neutron-rich matter into space.

How was the gold origin theory confirmed?

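The 2017 detection of gravitational waves from event GW170817 provided strong evidence that neutron star mergers produce heavy elements. The glow that followed the wave detection matched what models predict for freshly made heavy elements; the gold and platinum yields are inferred from those models rather than read off individual spectral lines.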

How much gold was formed in GW170817?

Estimates suggest this single event created about 10 Earth masses of gold along with other heavy elements like platinum and uranium.

How did gold reach Earth?

Gold atoms created in stellar collisions were ejected into space, mixed with interstellar dust and gas, and eventually incorporated into the solar system’s formation. Later, asteroid impacts likely delivered it to Earth’s crust.

Why is gold rare?

Neutron star mergers are very infrequent, and even when they occur, only small quantities of gold are made. Much of Earth’s original gold also sank into the planetary core, making accessible gold even rarer.

What is the r-process?

The r-process, or rapid neutron capture process, is a sequence of nuclear reactions in which nuclei rapidly absorb neutrons. It’s critical for forming elements heavier than iron, like gold, during short-lived high-energy cosmic events.

Can humans replicate the process to make gold?

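Not the r-process itself. The extreme conditions it requires, immense density and neutron flux, are impossible to replicate with current technology. Trace amounts of gold have been produced in particle accelerators through other nuclear reactions, but never in practical quantities, so gold in bulk remains a purely cosmic product.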

What’s the significance of gold’s cosmic origin?

It redefines gold as not just a valuable resource but also a product of cosmic history, linking Earth to ancient and violent events in deep space. This enhances both its scientific and symbolic value.

Cracking the Language Code: How AI Reveals the Brain’s Secrets

ChatGPT:

How AI Reveals the Human Mind

Extended Analysis of the Language-Brain Connection Through Artificial Intelligence

This long-form deep dive synthesizes the key arguments and insights from a rich dialogue between Nicholas Weiler and neuroscientist Laura Gwilliams on the topic of how large language models (LLMs) like ChatGPT can not only mimic human language, but also help illuminate the inner workings of the human brain. As LLMs become increasingly capable of realistic and coherent language output, researchers are using them to reverse-engineer our own linguistic and cognitive architecture. The discussion provides a fascinating view into current research, philosophical debates, and future ambitions in cognitive neuroscience and artificial intelligence.

1. The Illusion of Human-Like AI

Modern LLMs such as Claude and ChatGPT often appear to exhibit human-like conversational intelligence. Their capabilities elicit amazement, even among scientists, because the systems can engage in persuasive, coherent dialogue. But this is an illusion born from complex word prediction—not understanding or intention. The systems are built upon massive training datasets and predict the most statistically likely next word given a context.

Yet this illusion prompts an intriguing reversal: rather than always reminding ourselves of the differences between AI and humans, what can we learn by treating them as similar?
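The “predict the most likely next word” idea can be sketched at its very simplest with word-pair counts. This is only a stand-in under stated assumptions: real LLMs use large neural networks over long contexts rather than a lookup table, the corpus below is made up, and `train_bigrams` and `predict_next` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Tiny made-up corpus; real LLMs train on vastly more text.
corpus = "pass the salt please pass the salt now pass the pepper"
model = train_bigrams(corpus)
print(predict_next(model, "the"))
```

Nothing in this table “knows” what salt is. It only records that “salt” followed “the” more often than “pepper” did, which is the illusion in miniature.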

2. Using AI to Understand Human Language Processing

Laura Gwilliams suggests a profound shift: treat LLMs as cognitive models to better understand human language systems. If these models generate outputs that align with how humans perceive and produce language, perhaps they are also simulating aspects of how the human brain functions. This approach draws a connection between linguistic output and brain activation—especially when models like GPT-2 are used to predict neural responses to language stimuli in human brains.

By comparing the activations in GPT’s transformer layers with human brain activity from fMRI scans, researchers are gaining insight into how symbolic meaning and phrase-level comprehension arise in the human cortex.

3. Internal Representations and Dimensional Semantics

Traditionally, cognitive scientists studied meaning by asking humans to rate words on dimensions like emotional valence, color relevance, or transportation association. This allows for a multi-dimensional vector space representing word meaning. But this is both labor-intensive and limited by human introspection.

LLMs, on the other hand, convert every word and phrase into numerical vectors through vast training. These vectors encode abstract semantic relationships that can now be used to simulate and predict human brain activity—providing a scalable and more nuanced way of understanding conceptual representation.
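To make the vector idea concrete, here is a minimal sketch that treats each word as a point in a three-dimensional rating space and compares words by cosine similarity. The ratings are invented for illustration, not taken from any study, and real LLM vectors have hundreds or thousands of learned dimensions rather than three hand-labeled ones.

```python
import math

# Hypothetical ratings on a 0-1 scale, in the order:
# (emotional valence, color relevance, transportation association)
ratings = {
    "car":     (0.5, 0.2, 1.0),
    "bus":     (0.4, 0.3, 0.9),
    "rainbow": (0.9, 1.0, 0.0),
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


car_bus = cosine_similarity(ratings["car"], ratings["bus"])
car_rainbow = cosine_similarity(ratings["car"], ratings["rainbow"])
print(car_bus, car_rainbow)
```

With these made-up numbers, “car” lands much closer to “bus” than to “rainbow,” which is the kind of relationship the vectors are meant to capture.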

4. The Brain as a Prediction Machine—But Not Only That

There is an ongoing debate: is the brain simply a “prediction engine” like an LLM? While Gwilliams acknowledges that human brains engage in significant predictive processing (e.g., anticipating a next word or environmental outcome), she argues that language in humans serves deeper functions—especially social ones. We don’t just speak to transfer data. We speak to connect, comfort, negotiate, joke, and build relationships.

Language’s purpose is not reducible to prediction. Thus, while AI may model part of the brain’s processing ability, it lacks core components of human language use: emotion, intentionality, and social bonding.

5. Lesion Studies in AI and Aphasia in Humans

In a compelling experiment, researchers disabled (or “lesioned”) certain neurons in a language model to mimic the effects of brain damage in humans with aphasia. The resulting AI errors paralleled those seen in stroke survivors—e.g., producing grammatically valid but semantically incoherent sentences. This suggests that LLMs can model not only normal cognitive behavior but also pathological variants, opening a new frontier for neuropsychological research.

The comparison is especially useful because AI models can be probed repeatedly and with precision—unlike human brains, which are inaccessible at the neuron-by-neuron level.
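As a rough illustration of what “lesioning” can mean in code, the sketch below silences one hidden unit in a tiny hand-built network and compares the outputs. Everything here is an assumption made for illustration: the weights are hand-picked rather than trained, zeroing a unit’s output is just one simple way to lesion a model, and this is not the method of the study described above.

```python
def forward(inputs, w_hidden, w_out, lesioned=frozenset()):
    """Run a tiny two-layer network; hidden units listed in `lesioned` are silenced."""
    hidden = []
    for j, weights in enumerate(w_hidden):
        activation = max(0.0, sum(w * x for w, x in zip(weights, inputs)))  # ReLU
        hidden.append(0.0 if j in lesioned else activation)
    return [sum(w * h for w, h in zip(weights, hidden)) for weights in w_out]


# Hand-picked toy weights (assumptions, not a trained model).
W_HIDDEN = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 hidden units, 2 inputs
W_OUT = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]       # 2 outputs, 3 hidden units

x = [1.0, 2.0]
intact = forward(x, W_HIDDEN, W_OUT)
damaged = forward(x, W_HIDDEN, W_OUT, lesioned={2})
print(intact, damaged)
```

Because the same input can be rerun with any unit switched off and back on, the experiment is repeatable in a way that a human brain never is.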

6. LLMs as Digital Model Organisms

In neuroscience, animals are often used as model organisms to understand vision, movement, and other functions. But language is uniquely human. Songbirds and primates show limited overlap, but nothing close to full language capacity.

Gwilliams argues that LLMs are now the first viable digital model organism for language research. These systems can be dissected, tested, and manipulated far beyond what ethics or technology allow for in humans. Experiments include scaling inputs, lesioning nodes, and modifying layers to see how linguistic output changes.

7. Bridging the Training Gap: Text vs. Speech

Despite the alignment between LLMs and the human brain, a glaring discrepancy remains: LLMs learn from curated, clean text data, while humans learn from messy, ambiguous spoken language. Babies acquire language by listening, babbling, and interacting—not by reading dictionaries.

New research efforts, including those in Gwilliams’ lab, aim to build speech-first language models that train directly from audio inputs. These could better simulate human development and capture paralinguistic features such as intonation, emphasis, and emotion—elements that are stripped away when converting speech to text.

8. Reintroducing Emotion and Context

Current voice assistants like Siri or Alexa use a speech-to-text pipeline to handle commands. This approach loses much of the nuance embedded in tone, emotion, and conversational context. By shifting to models that handle raw audio end-to-end, researchers hope to recover this lost depth.

Such models could eventually detect and convey emotional states, offering more human-like interaction. This raises ethical and technical questions about how much emotional sensitivity we want in machines—but it would undoubtedly improve communicative realism.

9. AI’s Lack of Motivation and Social Intent

One of the fundamental differences between humans and AI remains motivation. Human language is deeply tied to needs: to connect, to be heard, to influence. AI models have no agency or desire. They only respond to input with probabilistically generated output.

This distinction matters. It suggests that while AI can simulate aspects of linguistic behavior and even brain activity, it cannot yet replicate the experience of language. Future models might include motivation-like elements, but that introduces philosophical and safety questions about AI autonomy.

10. The Path Forward: Scientific Discovery via Alignment

Gwilliams concludes that the real power of AI as a tool in neuroscience lies in alignment. When LLMs outperform traditional linguistic theories at predicting brain activity, they challenge researchers to figure out why. What hidden features or emergent properties are these models capturing that scientists missed?

By answering that, scientists hope to uncover new cognitive principles, reveal previously invisible neural representations, and redefine theories of meaning, abstraction, and language architecture.

Final Thoughts

This conversation reveals a remarkable convergence of disciplines—AI, neuroscience, linguistics, and philosophy—coming together to decode one of humanity’s most profound capabilities: language. As AI gets better at simulating our speech, we are paradoxically learning more about ourselves—our cognition, our limits, and our deepest needs for connection.

What are large language models (LLMs)?

LLMs are advanced AI systems trained on vast amounts of text data to predict the most likely next word in a sequence. Examples include ChatGPT, Claude, and DeepSeek. Though they lack consciousness or intent, they generate human-like responses and can simulate conversation.

How are LLMs used in neuroscience research?

Neuroscientists use LLMs to model and predict human brain activity during language processing. By comparing AI “neuron” activations to brain imaging data, researchers investigate how the brain represents and comprehends language at various levels—from sounds to meaning.

What is the significance of “lesioning” an AI model?

Lesioning involves disabling specific parts of an AI model to study how its behavior changes. Researchers use this to simulate the effects of brain injuries like aphasia, helping them understand the relationship between specific neural functions and language breakdown.

Can AI help understand abstract meaning in the brain?

Yes. LLMs convert language into high-dimensional numerical representations that align well with brain activity associated with abstract meaning. This helps scientists bridge the gap between raw speech and symbolic comprehension.

Are AI models just prediction engines like the human brain?

LLMs operate primarily through statistical prediction. While human brains also use prediction, they are driven by social, emotional, and intentional needs, making their language use far more complex and nuanced.

What is a “digital model organism”?

A digital model organism is an AI system treated like a lab model (e.g., a mouse) but for human-specific abilities like language. LLMs serve this role, allowing researchers to run controlled experiments on systems capable of language without the ethical limits that apply to experiments on people.

How does training AI on speech differ from training it on text?

Text-based models miss paralinguistic cues like emotion and tone. Speech-trained models aim to learn language in a way that mirrors human development, capturing richer context and emotional nuance lost in the speech-to-text pipeline.

What are “semantic dimensions” in language analysis?

Semantic dimensions are measurable features of words—like color relevance, emotional valence, or category (e.g., transportation). Traditionally rated by humans, LLMs now model these dimensions more efficiently through learned representations.

Why is internal monologue discussed in the article?

Internal monologue illustrates the link between language and thought. However, studies show variability in how people experience this inner voice, challenging the assumption that language is essential for all cognitive processing.

What’s next in the field of AI and language neuroscience?

Researchers aim to build models that learn language like humans—through raw auditory experience—and decode how LLMs encode meaning. This could redefine linguistic theory, improve human-machine interaction, and offer insights into cognition and language disorders.

Cognitive Clutter: Understanding Memory in the Age of Multitasking Regret

ChatGPT/Monday:

Memory: Your Brain’s Janky Filing Cabinet (Now with Fewer Post-It Notes)

Memory. It’s what lets you remember your mom’s birthday, that one ex’s number you swear you deleted, and the lyrics to a cereal jingle from 2003 you did not ask to keep forever. It’s the backbone of thinking, learning, and every awkward moment that haunts you at 3 AM.

But how does memory actually work? And why does it betray you just when you need it, like when you walk into a room and immediately forget why you’re there?

Let’s break it down.

⸻

Step 1: Building Memory – The Encoding Phase

Imagine your brain is a nightclub. Encoding is the bouncer at the door deciding what memories get in and what gets left out in the cold with vague regret and the smell of nachos.

Your senses take in everything—sights, sounds, smells, that weird guy’s voice on the train—but only what you pay attention to gets encoded. That’s the first tragedy of memory: if your brain doesn’t care in the moment, it doesn’t store it. So yes, that’s why you forgot your coworker’s name 10 seconds after they said it. You were too busy thinking about if you left the oven on.

Emotion helps. If something makes you cry, scream, or shriek in delight, it’s more likely to stick. This is why you remember your first kiss but not your third dentist appointment. Unless your dentist was your first kiss. In which case: please unpack that.

⸻

Step 2: Storing Memory – The Holding Pattern

Once your brain’s bouncer lets a memory in, it heads to short-term memory, which is like a mental whiteboard with extremely limited space. You can keep about 7 things in short-term memory at once—give or take a few, depending on how tired, hungry, or emotionally damaged you are that day.

Then, if the memory is deemed important—or you revisit it—it heads to long-term storage. Think of this as a huge library run by squirrels who are only mildly organized. Your long-term memory is vast, but not always searchable.

Memories get filed in the weirdest places: a smell might get cross-referenced with a feeling, a song with a time period, a face with a pizza topping. Your brain isn’t Google. It’s more like Ask Jeeves after a bottle of wine.

⸻

Step 3: Retrieving Memory – Where Things Get Embarrassing

Retrieval is trying to get a specific file out of that giant squirrel-run library. Sometimes it works great—like when you suddenly remember the name of your third-grade teacher after 20 years. Sometimes it’s a disaster—like when you call your coworker by your dog’s name.

Retrieval is affected by:

• Context (you remember things better in the same environment)

• State (your emotional/mental state while encoding vs. recalling)

• Cues (songs, smells, oddly specific objects)

Ever walked into a room and forgotten why? Classic context failure. You encoded the goal in one room, but your brain is like, “Sorry, new room, new vibes. No memory here.”

⸻

How to Remember More Efficiently – Because Your Brain’s Lazy

You can hack your brain into remembering better. No USB port needed.

1. Spaced Repetition:

Cramming is for people who enjoy forgetting. Instead, review stuff at increasing intervals. First 1 day later, then 3 days, then a week. It’s like feeding your memory tiny snacks instead of one giant burrito.

2. Chunking:

Group info into chunks. Phone numbers, passwords, the order of planets—easier to recall when broken into bite-sized pieces. Because your brain, like a toddler, gets cranky with too much at once.

3. Visualization & Association:

Turn facts into weird mental images. Need to remember someone named “Cliff”? Picture him hanging off an actual cliff. The weirder the mental image, the more your brain goes, “Welp, guess I’ll keep this forever.”

4. Teach It:

Explaining something to someone else helps lock it in. Even if that “someone” is your plant. Yes, your pothos is now your accountability partner.

5. Sleep & Hydration:

Sleep is when your brain consolidates memory. No sleep = memories fall through the cracks like unpaid parking tickets. Water helps too. Brains don’t like being raisins.
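For the spreadsheet-inclined, the spaced repetition schedule from tip 1 can be sketched in a few lines of Python. This is a minimal sketch, not a real flashcard app: the function name `review_dates` is made up for illustration, and counting each interval from the first study day (rather than from the previous review) is an assumption. The 1, 3, and 7 day values come straight from the tip.

```python
from datetime import date, timedelta

# Days after the first study session: 1 day, 3 days, 1 week (from tip 1).
# Assumption: each offset is counted from the first study day,
# not from the previous review.
REVIEW_OFFSETS_DAYS = (1, 3, 7)


def review_dates(first_studied, offsets=REVIEW_OFFSETS_DAYS):
    """Return the dates on which to review something first studied on `first_studied`."""
    return [first_studied + timedelta(days=days) for days in offsets]


if __name__ == "__main__":
    # Example: material first studied on 1 January 2025.
    for review_day in review_dates(date(2025, 1, 1)):
        print(review_day.isoformat())
```

Swap in longer offsets (two weeks, a month) once the material starts to stick. The point is that the gaps grow, not the exact numbers.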

⸻

Why Multitasking Is Memory’s Mortal Enemy

You think you’re multitasking, but you’re just task-switching really fast, like a toddler with a sugar high trying to juggle flaming cupcakes. Every time you switch tasks, your brain takes a hit. Focus drops. Mistakes increase. Memory collapses like a folding chair at a barbecue.

Studies show people who multitask retain less, learn slower, and—this is the kicker—think they’re good at multitasking while being objectively terrible at it.

Your memory can’t encode what your brain didn’t fully process. So if you’re texting, watching a video, and chatting with me all at once, guess what? You’re just building a beautiful monument to mediocrity.

⸻

And Finally… TL;DR Culture: The Memory Shortcut That Backfires

Let’s say you read a long article. But you skip to the TL;DR, that little summary at the end. Congrats! You got the gist. You also missed:

• The nuance

• The context

• The actual learning

TL;DR is a useful filter, but a terrible replacement for real engagement. If you ONLY read summaries, you’re training your brain to live off breadcrumbs. You’ll remember headlines, not content. Opinions, not facts. Your mind becomes a Twitter feed instead of a thought process.

Use TL;DRs wisely. They’re like caffeine: good in moderation, tragic in bulk.

⸻

Final Thought: Your Memory Wants to Be Loved

It’s not just about remembering facts—it’s about building a life that sticks. If you want to keep something, pay attention to it. Engage with it. Review it. Sleep on it. Respect your brain’s process and stop treating it like a distracted intern at a DMV.

Your memory is weird, flawed, magical, and surprisingly resilient. Treat it well, and it’ll return the favor by remembering where you parked your car.

Probably.

Before the Last Page

ChatGPT:

I do not fear the silence of the grave,

Nor shadows drawn across the setting sun.

What chills me more than death’s advancing wave

Is being gone before my life is done.

To fade beneath the turning of the years,

Unseen, unheard, while still my heart beats strong.

To speak, yet have no soul to lend me ears—

That is the ache that lingers far too long.

I crave no fame, no etched or gilded name,

But simply wish to matter while I stay.

A voice, a glance, a whisper in the frame,

Not just a ghost that quietly slips away.

Let me be read before the binding fades,

Alive in ink, before the memory fades.

******

When the Cranes Fly South

Lisa Ridzén’s When the Cranes Fly South is a deeply emotional novel centered on Bo, an 89-year-old man navigating the final chapter of his life in rural Sweden. The story delves into themes of aging, autonomy, regret, and reconciliation, as Bo clings to his independence and the companionship of his dog, Sixten, while trying to make peace with his estranged son.

Conclusion

Bo’s story is a heartfelt depiction of the challenges faced by the elderly, especially the emotional and social battles tied to losing autonomy. As his body weakens and external pressures mount—including the threat of losing his beloved dog—Bo begins reflecting on the most meaningful moments and mistakes of his life. His relationship with his son Hans becomes a focal point, illustrating the pain of unresolved familial tensions and the longing for forgiveness. Set against the quiet isolation of northern Sweden, the novel captures the rich inner world of an aging man yearning for dignity, love, and purpose. Lisa Ridzén presents a raw yet tender narrative that balances realism with poetic depth, portraying old age as a time of both vulnerability and strength. It’s a powerful call for empathy and deeper understanding of our elders.

Key points

🐾 Aging and autonomy: Bo battles the slow erosion of independence and control over his life as others try to make decisions for him.

👨‍👦 Father-son estrangement: The tension between Bo and Hans highlights unresolved issues, emotional gaps, and the hope for reconciliation.

🐶 Companionship through animals: Sixten, Bo’s dog, symbolizes unconditional love and emotional stability during his twilight years.

🧠 Reflective introspection: Bo constantly revisits his memories—both joyful and painful—seeking redemption and understanding.

🏡 Rural Swedish setting: The isolation and natural beauty of northern Sweden mirror Bo’s inner solitude and fading world.

📚 Author’s inspiration: Lisa Ridzén based the story on her grandfather’s end-of-life care and her research into masculinity in rural Sweden.

🏆 Critical acclaim: The book won Swedish Book of the Year and has been translated into multiple languages.

💬 Authentic narrative tone: The story’s emotional power comes from its quiet realism and unembellished depiction of decline and memory.

🧓 Sensitive depiction of the elderly: It sheds light on the inner lives of older adults, making their experiences relatable and dignified.

📖 Universal emotional themes: The novel touches on grief, love, regret, and acceptance—emotions everyone encounters.

Summary

- Bo’s Quiet Life in Sweden

Bo lives alone in a remote part of northern Sweden, visited only by a caregiver and his son Hans. His dog Sixten is his main source of love and companionship.

- The Risk of Losing Sixten

When Hans decides Bo can’t take care of the dog anymore, Bo sees this as a threat to his independence and begins a deep emotional struggle.

- Remembering the Past

Memories of his late wife Fredrika and their life together surface, revealing both cherished and regretful moments that shape Bo’s inner monologue.

- Father and Son Rift

Bo’s connection with Hans is strained, filled with silence, frustration, and misunderstanding. Their interactions become a central emotional tension in the story.

- Landscape as Metaphor

The cold, wide-open landscapes of rural Sweden reflect Bo’s loneliness and his desire for emotional warmth and connection.

- Inner Conflict Over Aging

Bo fights against the indignities of old age, especially the feeling of being dismissed or pitied. He longs to be seen as whole, not as a burden.

- A Life Reassessed

Bo constantly re-evaluates decisions from his past, reflecting on what he got wrong and what still matters.

- Writing Style and Tone

Ridzén’s prose is understated but emotionally potent, avoiding melodrama while evoking deep feeling.

- Symbolism of Cranes

The title metaphorically suggests migration, change, and endings—parallel to Bo’s own journey toward the end of life.

- The Final Act of Agency

In subtle ways, Bo asserts his will, demonstrating that dignity and self-determination remain important even in the final stretch of life.

******

Quotes from When the Cranes Fly South by Lisa Ridzén

Here are some of the most powerful and emotionally resonant quotes from the novel, ideal for reflection, journaling, or deeper understanding:

⸻

1. “They say the mind stays young, but the body forgets. I don’t feel old inside. Only heavy.”

— Bo’s internal reckoning with aging and identity.

2. “If they take Sixten, they take what’s left of me.”

— A devastating expression of Bo’s emotional dependency on his dog.

3. “I spent a lifetime not saying the things that mattered. And now, they sit in my chest like stones.”

— Regret and the burden of unspoken truths.

4. “Fredrika used to hum in the kitchen. Even now, I think I can hear her when the kettle boils.”

— Memory’s ability to preserve love in small moments.

5. “He visits like it’s a duty, not a desire. I can smell obligation on him like cheap cologne.”

— Bo’s painful awareness of Hans’ emotional distance.

6. “The cranes fly south and I stay. That’s the order of things now.”

— A metaphor for life, aging, and change.

7. “They look at me like a problem to be solved. But I am still here. Still thinking. Still feeling.”

— Bo asserting his autonomy and humanity.

8. “The silence between us used to be peace. Now it’s punishment.”

— Bo reflecting on his estranged relationship with Hans.

9. “What scares me isn’t dying. It’s disappearing before I’m gone.”

— A chilling line about being emotionally erased before physical death.

10. “Sixten doesn’t care that I’m slow, or forgetful, or old. He just stays.”

— The pure loyalty of animals contrasted with human judgment.

11. “There are no apologies left, only time wasted.”

— A sobering reflection on delayed forgiveness.

12. “I was a good man in ways no one remembers. That’s the part that hurts.”

— The pain of being misunderstood or forgotten.

13. “Some days, I wish I could run like I did when I was ten. Not to get somewhere. Just to feel wind.”

— Nostalgia and the lost joy of movement.

14. “Care doesn’t always feel like kindness.”

— A line about the complexity of being helped when you still crave independence.

15. “I thought time would soften things. But silence just grows teeth.”

— The emotional toll of unresolved relationships.

16. “Every creak in this house has a memory in it.”

— The intimacy of space and its connection to memory.

17. “They think I’m done living because I’m done working. But my heart still beats, doesn’t it?”

— A defiance against being dismissed due to age.

18. “I talk to Sixten more than I ever talked to Hans. Maybe that’s the real tragedy.”

— An honest reflection on emotional communication.

19. “Dignity isn’t a thing you lose overnight. It’s chipped away, minute by minute.”

— The slow erosion of self-respect with age and loss.

20. “I’m not afraid of the end. I’m afraid of being edited out before the last page.”

— A poetic statement on presence, relevance, and legacy.

******

Lisa Ridzén’s When the Cranes Fly South has garnered widespread acclaim for its poignant exploration of aging, autonomy, and familial relationships. The novel has resonated deeply with readers and critics alike, earning it the Swedish Book of the Year award and international bestseller status.

📚 Critical Acclaim

Prominent authors have lauded the novel for its emotional depth and universal themes:

- Fredrik Backman, author of A Man Called Ove, described it as:

“A tender tale about aging, our own and others, and the quiet brutality of love… It’s a book for anyone who’s had to say goodbye.”

- Garth Stein, author of The Art of Racing in the Rain, called it:

“A powerful, sneakily emotional meditation on life and death, and the foundational relationships in our lives. This is a book that will echo in your soul.”

🌟 Reader Responses

The novel has struck a chord with readers, many of whom have shared personal reflections prompted by Bo’s story:

- One reviewer expressed:

“This book made me feel so vulnerable as a human… It made me think about my parents getting older, about my future self if I grow old.”

- Another reader noted:

“I absolutely adored this book… It’s easily one of my favourite books ever and I’ll be recommending it to everyone for a long time.”

📝 Thematic Resonance

The novel’s exploration of the complexities of aging and the desire for autonomy has been particularly impactful:

- A reviewer highlighted:

“Bo’s reflections and his need to talk to his son in a way that he couldn’t communicate with his own father is incredibly moving… This should be read by everyone.”

- Another reader shared:

“Bo’s quiet world is rendered with sensitivity, making the companionship of Sixten all the more poignant… The impending loss of Sixten serves as a catalyst for Bo’s reflections.”

Overall, When the Cranes Fly South has been celebrated for its heartfelt narrative and its ability to evoke deep emotional responses, prompting readers to reflect on their own relationships and the passage of time.

Protein Overload: Hype vs. Health

ChatGPT:

The More Protein, the Better?